AWS Glue Data Catalog (Hive Metastore)

AWS Glue Data Catalog is a fully managed, serverless data catalog service provided by Amazon Web Services. It enables users to discover, search, and manage metadata for their data assets across various data sources. With the Glue Data Catalog, you can integrate and process data from multiple sources using AWS data services such as Amazon Redshift, Amazon Athena, and Amazon EMR.

Cloud Provider Support

- AWS: ✅ Supported

- GCP: Not supported

Setup guide

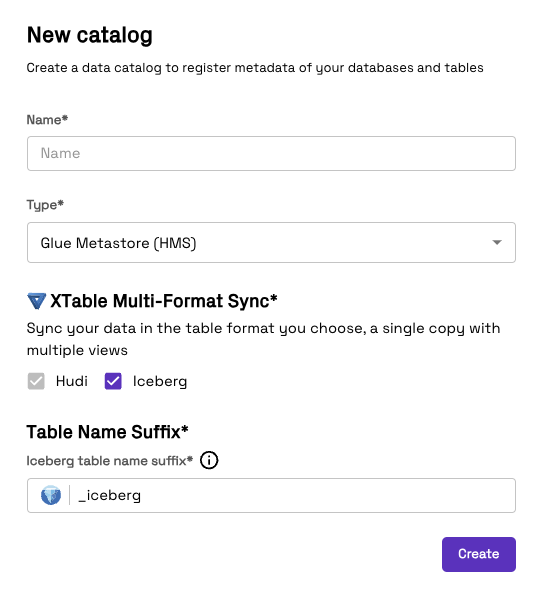

- Enter a Name to identify the data catalog in Onehouse

- Specify the AWS region of the Glue catalog

- Optionally, specify the IAM role to use for accessing the Glue catalog in a separate AWS account

- Create the Glue catalog integration

Sync additional table formats

Onehouse natively supports syncing to the Glue Data Catalog in multiple open table formats.

Tables are always synced in the Apache Hudi format by default. You may additionally sync tables as Apache Iceberg using Apache XTable. This means that a single copy of your data will now be synced to the Glue Catalog in both the Apache Hudi and Apache Iceberg formats, enabling you to use the best format for your use-case.

To set this up, select the formats to sync and define the suffix appended to the table name for each format (the Iceberg suffix defaults to _iceberg). Iceberg tables are then registered as tableName_iceberg in the Glue Catalog, as shown in the table below.

| Format | Table Name (in catalog) |
|---|---|
| Apache Hudi (default) | tableName_ro (read optimized view), tableName_rt (real time or snapshot view) |
| Apache Iceberg | tableName_iceberg |
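
The naming convention in the table can be sketched in Python. This is only an illustration, not part of Onehouse: the helper name `catalog_table_names` and its parameters are assumptions, while the `_ro`, `_rt`, and default `_iceberg` suffixes are taken from the table.

```python
def catalog_table_names(table_name: str, sync_iceberg: bool = False,
                        iceberg_suffix: str = "_iceberg") -> list[str]:
    """Return the names a table would be registered under in the Glue catalog."""
    # Hudi is always synced: a read-optimized view and a real-time view.
    names = [f"{table_name}_ro", f"{table_name}_rt"]
    # Iceberg is optional and uses a configurable suffix.
    if sync_iceberg:
        names.append(f"{table_name}{iceberg_suffix}")
    return names


print(catalog_table_names("orders", sync_iceberg=True))
```

A query engine that reads Iceberg would then point at the name ending in the Iceberg suffix, while Hudi readers keep using the `_ro` or `_rt` names.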

Onehouse-managed Iceberg tables should not be written to by external writers, as this could corrupt the data in the table.

Glue Data Catalog integrations can only be created in AWS projects.

Sync across AWS accounts

You may sync to Glue catalogs in a different AWS account from your Onehouse project. Follow the steps below to create an IAM role with access to the Glue catalog for Onehouse to use, then pass the IAM role into the catalog integration configuration.

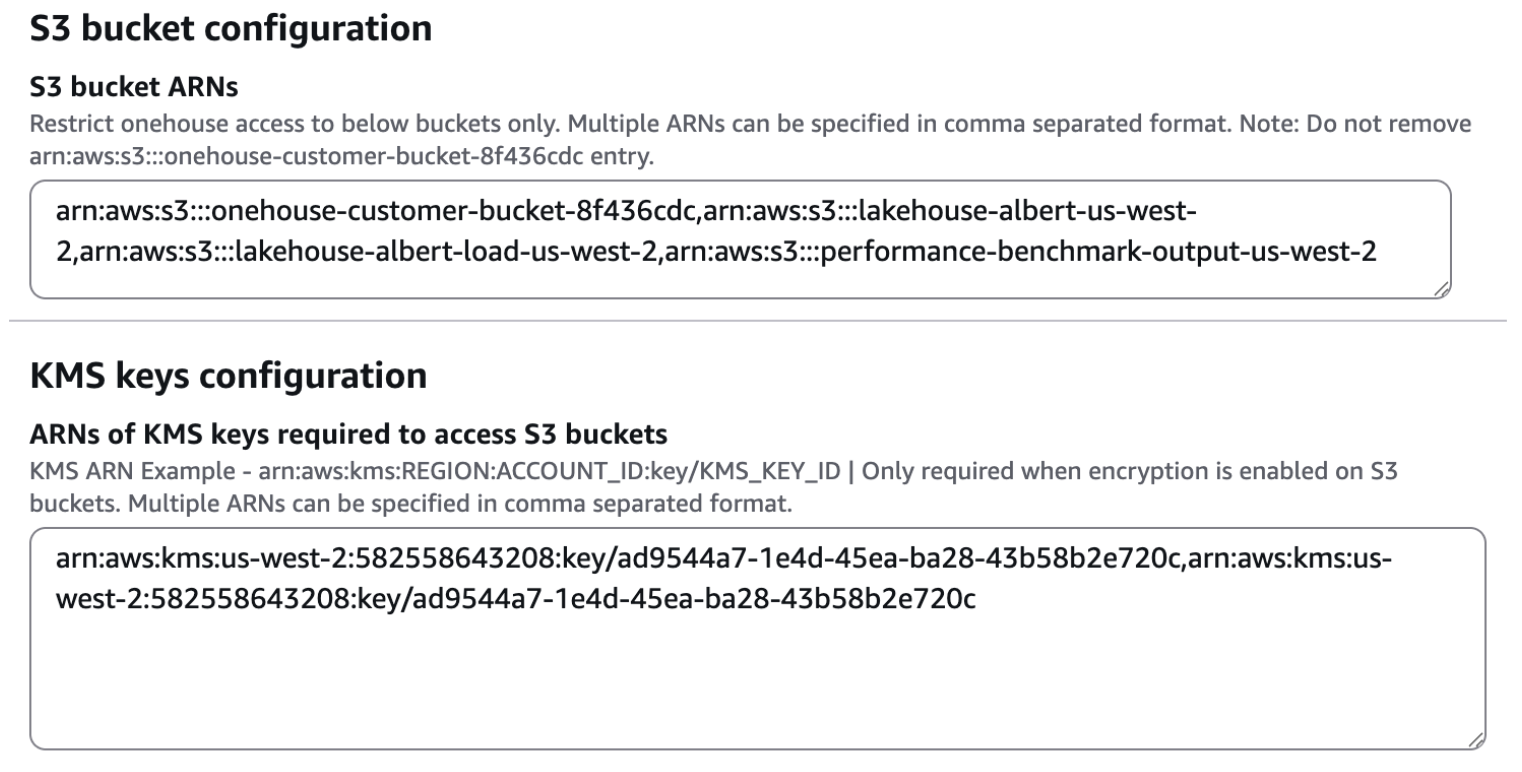

Step 1: Add your S3 bucket and KMS Keys (if required) to the Onehouse account permissions

Add the S3 buckets used for data sources. Add the KMS key ARNs used for data sources.

- CloudFormation example:

- Terraform example:

Step 2: Add your data lake AWS account permissions

Glue catalog

In your Data Lake account, open the Catalog Settings menu of the Glue Catalog and set the following resource policy, replacing these variables:

- onehouse_account_id: The AWS account ID of the account hosting your Onehouse cluster

- request_id_prefix: The first 8 characters of the Request ID of your Onehouse account

- glue_account_id: The AWS account ID of the account hosting your Glue Catalog

- aws_region: The AWS region of your Glue Catalog

For example, arn:aws:iam::${onehouse_account_id}:role/abcdefg becomes arn:aws:iam::123456789000:role/abcdefg.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "GlueAllowOnehouseAcme",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-eks-node-role-${request_id_prefix}",
          "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-core-role-${request_id_prefix}"
        ]
      },
      "Action": [
        "glue:GetDatabase",
        "glue:GetDatabases",
        "glue:GetTable",
        "glue:GetTables",
        "glue:GetTableVersion",
        "glue:GetTableVersions",
        "glue:GetPartition",
        "glue:GetPartitions",
        "glue:BatchGetPartition",
        "glue:GetUserDefinedFunction",
        "glue:GetUserDefinedFunctions",
        "glue:SearchTables",
        "glue:CreateDatabase",
        "glue:UpdateDatabase",
        "glue:DeleteDatabase",
        "glue:CreateTable",
        "glue:UpdateTable",
        "glue:DeleteTable",
        "glue:DeleteTableVersion",
        "glue:BatchDeleteTableVersion",
        "glue:CreatePartition",
        "glue:BatchCreatePartition",
        "glue:UpdatePartition",
        "glue:BatchUpdatePartition",
        "glue:DeletePartition",
        "glue:BatchDeletePartition",
        "glue:CreateUserDefinedFunction",
        "glue:UpdateUserDefinedFunction",
        "glue:DeleteUserDefinedFunction"
      ],
      "Resource": [
        "arn:aws:glue:${aws_region}:${glue_account_id}:catalog",
        "arn:aws:glue:${aws_region}:${glue_account_id}:database/*",
        "arn:aws:glue:${aws_region}:${glue_account_id}:table/*",
        "arn:aws:glue:${aws_region}:${glue_account_id}:table/*/*"
      ]
    }
  ]
}
```

This permission policy allows read and write access (get, create, update, and delete) to your Glue data catalog and all of its databases and tables. To restrict access to specific tables, list those tables in Resource instead of using wildcard (*) values.
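
When restricting the policy to specific tables, the Resource list can be generated rather than typed by hand. The following is a minimal sketch: `glue_resource_arns` is a hypothetical helper, and the region, account ID, database, and table names are example values. It keeps the catalog and database ARNs because Glue also checks table-level actions against the parent catalog and database.

```python
def glue_resource_arns(aws_region: str, glue_account_id: str,
                       database: str, tables: list[str]) -> list[str]:
    """Build Resource ARNs limited to specific tables in one database."""
    prefix = f"arn:aws:glue:{aws_region}:{glue_account_id}"
    # Table-level Glue actions are also authorized against the parent
    # catalog and database, so both stay in the list.
    arns = [f"{prefix}:catalog", f"{prefix}:database/{database}"]
    # One ARN per table, in the form table/<database>/<table>.
    arns += [f"{prefix}:table/{database}/{table}" for table in tables]
    return arns


# Example values only; substitute your own region, account, and names.
for arn in glue_resource_arns("us-east-1", "123456789012", "sales",
                              ["orders", "customers"]):
    print(arn)
```

The returned list would replace the wildcard entries in the policy's Resource array.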

S3 buckets

For each S3 bucket used as a data source in your Data Lake account, open the Permissions tab and, under Bucket Policy, set the following policy, replacing these variables:

- onehouse_account_id: The AWS account ID of the account hosting your Onehouse cluster

- request_id_prefix: The first 8 characters of the Request ID of your Onehouse account

- bucket_name: The name of the S3 bucket

For example, arn:aws:iam::${onehouse_account_id}:role/abcdefg becomes arn:aws:iam::123456789000:role/abcdefg.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AccessToDataSourceBucket",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-eks-node-role-${request_id_prefix}",
          "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-core-role-${request_id_prefix}"
        ]
      },
      "Action": [
        "s3:GetBucketNotification",
        "s3:PutBucketNotification",
        "s3:ListBucket",
        "s3:ListBucketVersions",
        "s3:GetBucketVersioning"
      ],
      "Resource": "arn:aws:s3:::${bucket_name}"
    },
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-eks-node-role-${request_id_prefix}",
          "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-core-role-${request_id_prefix}"
        ]
      },
      "Action": [
        "s3:GetObject",
        "s3:GetObjectVersionAttributes",
        "s3:GetObjectRetention",
        "s3:GetObjectAttributes",
        "s3:GetObjectTagging",
        "s3:PutObject",
        "s3:DeleteObject"
      ],
      "Resource": "arn:aws:s3:::${bucket_name}/*"
    }
  ]
}
```
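
Because the same policy is applied to each bucket with only bucket_name changing, the substitution can be scripted. The sketch below uses Python's `string.Template`, whose `${name}` syntax matches the placeholders in the policy. It assumes an abbreviated template (one statement, one action) standing in for the full policy, and the helper name `render_bucket_policies` and all values are hypothetical.

```python
import json
from string import Template

# Abbreviated stand-in for the full bucket policy; in practice, paste the
# complete JSON here with its ${...} placeholders left intact.
BUCKET_POLICY_TEMPLATE = Template("""
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AccessToDataSourceBucket",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-eks-node-role-${request_id_prefix}",
          "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-core-role-${request_id_prefix}"
        ]
      },
      "Action": ["s3:ListBucket"],
      "Resource": "arn:aws:s3:::${bucket_name}"
    }
  ]
}
""")


def render_bucket_policies(onehouse_account_id: str, request_id_prefix: str,
                           bucket_names: list[str]) -> dict[str, dict]:
    """Render one policy document per bucket, keyed by bucket name."""
    policies = {}
    for bucket_name in bucket_names:
        # substitute() raises KeyError if a placeholder is left unfilled.
        rendered = BUCKET_POLICY_TEMPLATE.substitute(
            onehouse_account_id=onehouse_account_id,
            request_id_prefix=request_id_prefix,
            bucket_name=bucket_name,
        )
        # json.loads() confirms the rendered text is still valid JSON.
        policies[bucket_name] = json.loads(rendered)
    return policies


# Example values only.
policies = render_bucket_policies("123456789000", "abcd1234",
                                  ["raw-events", "curated"])
print(json.dumps(policies["raw-events"], indent=2))
```

Each rendered document can then be pasted into the bucket's policy editor. If a bucket already has a policy, merge the new statements into it rather than overwriting it.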

KMS keys

If you use customer-managed keys for S3 bucket encryption in your Data Lake account, open each KMS key and switch to Policy view.

Add the following statement to the key policy, replacing these variables:

- onehouse_account_id: The AWS account ID of the account hosting your Onehouse cluster

- request_id_prefix: The first 8 characters of the Request ID of your Onehouse account

- bucket_name: The name of the S3 bucket(s)

For example, arn:aws:iam::${onehouse_account_id}:role/abcdefg becomes arn:aws:iam::123456789000:role/abcdefg.

```json
{
  "Sid": "Allow use of the key",
  "Effect": "Allow",
  "Principal": {
    "AWS": [
      "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-eks-node-role-${request_id_prefix}",
      "arn:aws:iam::${onehouse_account_id}:role/onehouse-customer-core-role-${request_id_prefix}"
    ]
  },
  "Action": [
    "kms:Decrypt",
    "kms:GenerateDataKey"
  ],
  "Resource": [
    "${bucket_name}"
  ]
}
```
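
The KMS block is a single statement rather than a complete policy document, so it has to be merged into the key's existing policy. A minimal sketch of that merge follows. It assumes the existing policy's `Statement` is a list, and `upsert_statement` is a hypothetical helper. The statement in the example is abbreviated; use the full statement in practice.

```python
import copy


def upsert_statement(policy: dict, statement: dict) -> dict:
    """Return a copy of `policy` with `statement` added.

    A statement with the same Sid is replaced, so re-running the merge
    does not create duplicates.
    """
    merged = copy.deepcopy(policy)  # leave the caller's policy untouched
    statements = merged.setdefault("Statement", [])
    sid = statement.get("Sid")
    for i, existing in enumerate(statements):
        if sid is not None and existing.get("Sid") == sid:
            statements[i] = statement
            break
    else:
        # No statement with this Sid yet: append it.
        statements.append(statement)
    return merged


# Example key policy containing only a root-account statement (made-up values).
existing_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "Enable IAM User Permissions",
            "Effect": "Allow",
            "Principal": {"AWS": "arn:aws:iam::210987654321:root"},
            "Action": "kms:*",
            "Resource": "*",
        }
    ],
}

# Abbreviated stand-in for the Onehouse statement.
onehouse_statement = {
    "Sid": "Allow use of the key",
    "Effect": "Allow",
    "Action": ["kms:Decrypt"],
}

updated = upsert_statement(existing_policy, onehouse_statement)
print([s["Sid"] for s in updated["Statement"]])
```

Matching on Sid keeps the merge idempotent, which helps when the same script is run against many keys.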

Step 3: Add the Catalog in Onehouse

In the Onehouse console (or via API), create a Glue catalog. Specify the Catalog ARN and region:

- Region: The region of the Glue catalog, e.g. 'us-east-1'

- Catalog ARN: The ARN of the Glue catalog, e.g. 'arn:aws:glue:us-east-1:123456789012:catalog'
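
A mismatch between the Region field and the region embedded in the Catalog ARN is easy to miss, so it can help to check the two values before submitting them. The sketch below assumes the standard `aws` partition and a 12-digit account ID. The function names are hypothetical and not part of any Onehouse API.

```python
import re

# Matches ARNs of the form arn:aws:glue:<region>:<12-digit account>:catalog
_CATALOG_ARN = re.compile(
    r"^arn:aws:glue:(?P<region>[a-z0-9-]+):(?P<account_id>\d{12}):catalog$"
)


def catalog_arn(region: str, account_id: str) -> str:
    """Build the Glue catalog ARN for a region and account."""
    return f"arn:aws:glue:{region}:{account_id}:catalog"


def parse_catalog_arn(arn: str) -> dict:
    """Split a Glue catalog ARN into its region and account ID."""
    match = _CATALOG_ARN.match(arn)
    if match is None:
        raise ValueError(f"not a Glue catalog ARN: {arn!r}")
    return match.groupdict()


def validate_catalog_config(region: str, arn: str) -> dict:
    """Check that the Region field agrees with the region inside the ARN."""
    parts = parse_catalog_arn(arn)
    if parts["region"] != region:
        raise ValueError(
            f"region {region!r} does not match ARN region {parts['region']!r}"
        )
    return parts


print(validate_catalog_config(
    "us-east-1", "arn:aws:glue:us-east-1:123456789012:catalog"))
```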

For Lake Formation integrated projects

If you are using Lake Formation with your Glue Data Catalog, your Onehouse role must have the necessary permissions to access the Glue Data Catalog resources.

High-level steps

The role responsible for accessing the Glue resources is onehouse-customer-eks-node-role-<requestId-prefix>. This role is created when you deploy Onehouse in your account.

- In your Lake Formation console, go to the Data permissions tab.

- Use the node role as the Principal to grant permissions to the Named Data Catalog resources.

- Choose the appropriate Catalog, Databases, and Tables resources to grant permissions to.

- For flexibility, you may provide Super permissions to the role. This will allow Onehouse to access all resources in the Glue Data Catalog for the chosen resources.

- At the very minimum, you will need to provide the following permissions to the role for the resources you want Onehouse to access.

| Offering | Resource | Permissions |
|---|---|---|
| Observed Lake | Database | Create Table, Alter, Describe |
| Observed Lake | Table | Select, Insert, Describe, Alter, Drop |
| Managed Lake | Database | Create Table, Alter, Describe, Drop |
| Managed Lake | Table | Select, Insert, Describe, Alter, Drop, Delete |

Sample Input