Monitor Job Runs

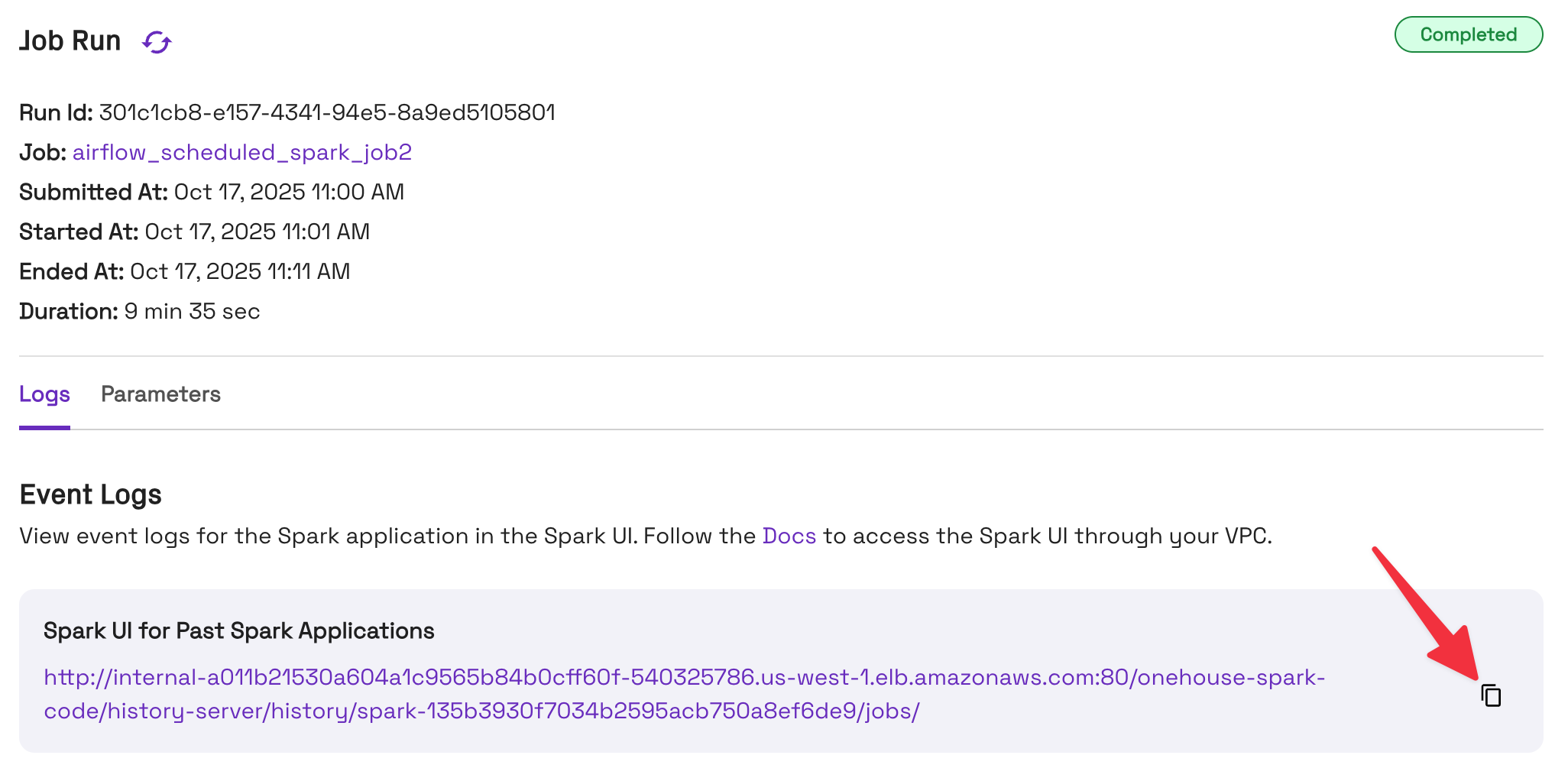

You can view the Event Logs and Apache Spark Driver Logs for any given Job run. In the Onehouse console, open the Jobs page, select a Job, then select the Job run you want to view.

Event Logs (Spark UI)

Event logs for the Apache Spark application are available in the Apache Spark Web UI (also called the Spark UI). Follow these steps to access the Spark UI.

Event logs are retained for 7 days after the Job run is completed or terminated.
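As a rough illustration of the retention window, the sketch below computes when the event logs for a finished Job run would stop being available. It assumes the 7 days are counted as exactly 7 × 24 hours from the run's end timestamp, which the text does not specify. The function names are hypothetical and not part of any Onehouse API.

```python
from datetime import datetime, timedelta, timezone

# Retention period stated above; assumed here to mean exactly 7 * 24 hours.
EVENT_LOG_RETENTION = timedelta(days=7)


def event_logs_expire_at(run_ended_at: datetime) -> datetime:
    """Return the assumed moment at which event logs stop being retained."""
    return run_ended_at + EVENT_LOG_RETENTION


def event_logs_available(run_ended_at: datetime, now: datetime) -> bool:
    """Return True while `now` is still inside the assumed retention window."""
    return now < event_logs_expire_at(run_ended_at)


# Hypothetical end time of a Job run (completed or terminated).
ended = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(event_logs_expire_at(ended).isoformat())  # 2024-01-08T12:00:00+00:00
```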

Open the Spark UI

- Open your Job run in the Onehouse console.

- Under the Logs tab, find the Event Logs section. Copy the event logs URL to access the Spark UI.

- The Spark UI is exposed on an internal load balancer, accessible from within your VPC. Follow these steps to connect to the internal load balancer.

  - Note: If public access is enabled, you can skip this step. For projects with public access enabled, the event logs URL will start with https://dp-apps.cloud.onehouse.ai/.

- After you've connected, enter the event logs URL in your browser to open the Spark UI.

  - If you used port forwarding in the previous step, replace the host in the URL with localhost and the forwarded port you configured.

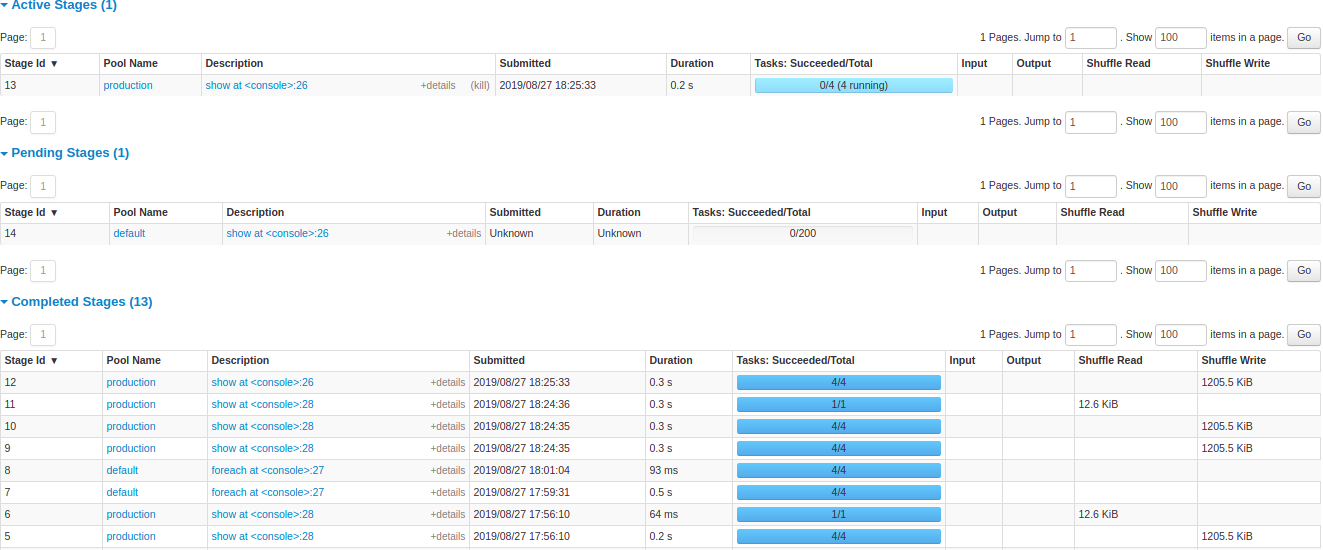

- View your Job run details in the Spark UI.

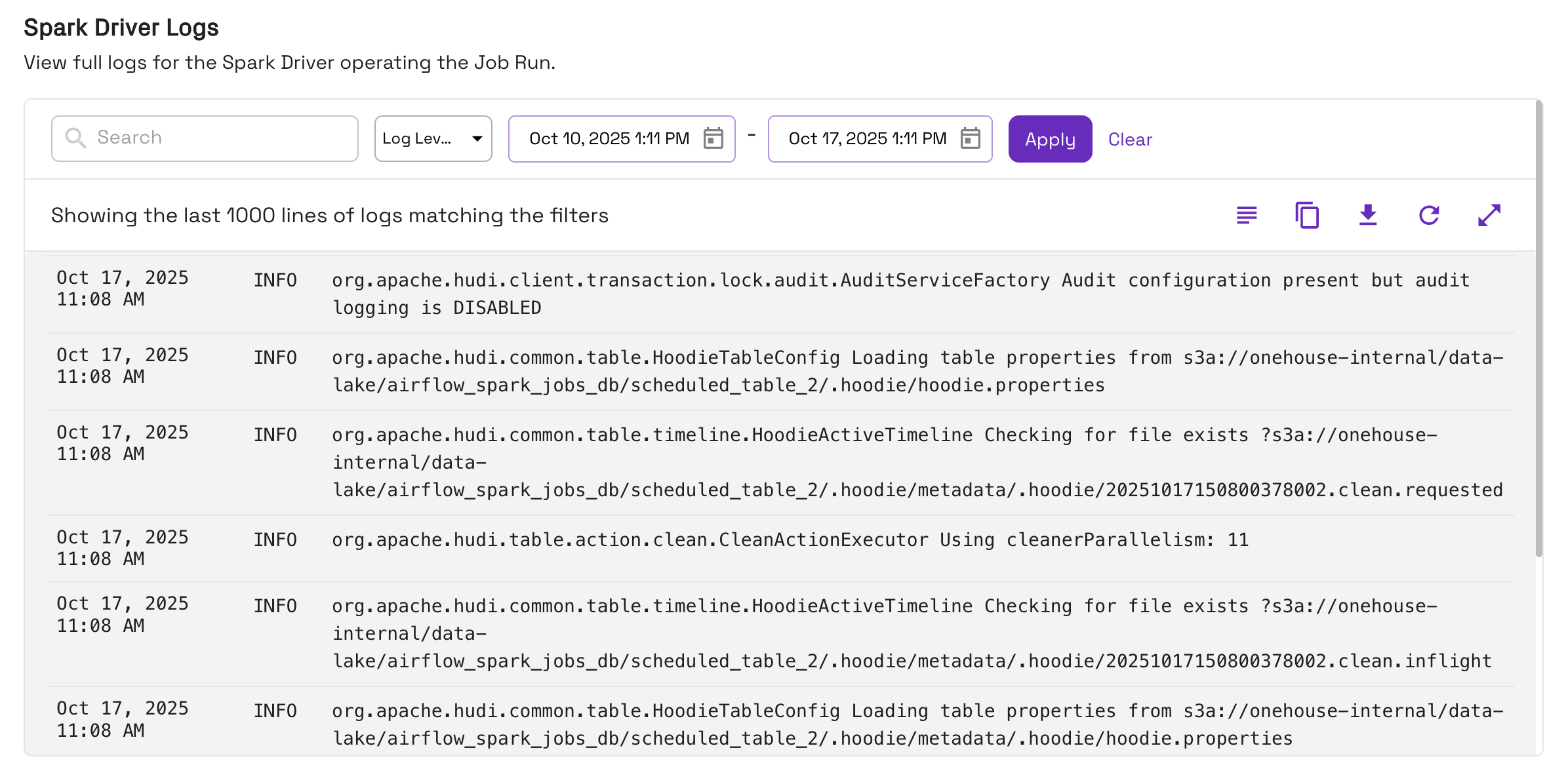
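The URL handling in the steps above can be sketched in a few lines of Python. This is a minimal illustration, not an Onehouse tool: the internal URL and port 8080 are made-up values, the helper names are hypothetical, and the sketch assumes the URL scheme stays the same when you go through the forwarded port (your port-forwarding setup may require a different scheme).

```python
from urllib.parse import urlsplit, urlunsplit

# Prefix the steps above give for event logs URLs when public access is enabled.
PUBLIC_PREFIX = "https://dp-apps.cloud.onehouse.ai/"


def is_public_url(event_logs_url: str) -> bool:
    """Return True if the URL can be opened directly, without a VPC connection."""
    return event_logs_url.startswith(PUBLIC_PREFIX)


def to_forwarded_url(event_logs_url: str, local_port: int) -> str:
    """Swap the host for localhost:<local_port>, keeping path and query intact."""
    parts = urlsplit(event_logs_url)
    return urlunsplit(parts._replace(netloc=f"localhost:{local_port}"))


# Hypothetical internal event logs URL and locally forwarded port.
url = "https://internal-lb.example.com/spark/history/app-123/jobs/?id=1"
if not is_public_url(url):
    url = to_forwarded_url(url, 8080)
print(url)  # https://localhost:8080/spark/history/app-123/jobs/?id=1
```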

Driver Logs

View driver logs in the Onehouse console

You can view the Apache Spark driver logs for any Job run. In the Onehouse console, open the Jobs page, select a Job, then select the Job run you want to view.

Configure custom driver logs

By default, Onehouse will generate driver logs with Apache Log4j. You can also configure additional custom logs to appear in the Onehouse console.

JAR Jobs

Import the org.apache.logging.log4j.Logger class and follow the Apache Log4j instructions to generate additional log events in your Job code.

Python Jobs

- Set up the LoggerProvider class in the Job code:

      from typing import Optional

      from pyspark.sql import SparkSession


      class LoggerProvider:
          def get_logger(self, spark: SparkSession, custom_prefix: Optional[str] = ""):
              # Reach the JVM-side Log4j API through the Spark session's Py4J gateway.
              log4j_logger = spark._jvm.org.apache.log4j  # noqa
              # Treat a None prefix as empty so the concatenation cannot fail.
              return log4j_logger.LogManager.getLogger((custom_prefix or "") + self.__full_name__())

          def __full_name__(self):
              # Build "<module>.<ClassName>" to use as the logger name.
              klass = self.__class__
              module = klass.__module__
              # "builtins" is the Python 3 name of the Python 2 "__builtin__" module.
              if module in ("builtins", "__builtin__"):
                  return klass.__name__  # avoid outputs like '__builtin__.str'
              return module + "." + klass.__name__

- Ensure the class that runs your Apache Spark Job inherits the LoggerProvider class.

- Output logs using self.logger:

      class SampleApp(LoggerProvider):
          def __init__(self):
              self.spark = SparkSession.builder \
                  .appName("SampleApp") \
                  .getOrCreate()
              # Logger named after this class, e.g. "__main__.SampleApp".
              self.logger = self.get_logger(self.spark)

          def run(self):
              self.logger.info("Starting the application")
              # Main code
              self.spark.stop()


      if __name__ == "__main__":
          app = SampleApp()
          app.run()
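The logger name that LoggerProvider passes to Log4j is the optional prefix followed by the module and class name of your Job class. The Spark-free sketch below mirrors that naming logic so you can preview the name to look for in the driver logs. It additionally treats Python 3's builtins module as built-in, and preview_logger_name and EtlJob are hypothetical names used only for illustration.

```python
def preview_logger_name(obj: object, custom_prefix: str = "") -> str:
    """Mirror the naming logic of LoggerProvider without needing a Spark session."""
    klass = obj.__class__
    module = klass.__module__
    if module in ("builtins", "__builtin__"):
        # Built-in types are reported without a module prefix.
        return custom_prefix + klass.__name__
    return custom_prefix + module + "." + klass.__name__


class EtlJob:
    """Hypothetical stand-in for a class that runs a Spark Job."""


# When this file is run directly as a script, the module is "__main__",
# so this prints "myteam.__main__.EtlJob".
print(preview_logger_name(EtlJob(), "myteam."))
```

A prefix such as the hypothetical "myteam." can make your custom log lines easier to filter from the default driver output.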