Create a Python Job

Python Jobs in Onehouse allow you to run a PySpark application.

Python Job Quickstart

Prerequisites

- Use Python 3.12 for the best compatibility.

- Ensure you have the Project Admin role. This is currently a requirement for creating Jobs.

- Install Docker with buildx (included in v19.03+). You will need this to build a virtual environment on the same architecture as the instances running your Jobs.

- A Cluster with the 'Spark' type to run the Jobs. You will need access to an existing Cluster in your project, or create one by following these steps:

- In the Onehouse console, navigate to the Clusters page.

- Click 'Add new Cluster'.

- Select 'Spark' as the Cluster type.

Step 1: Create your Python script and package dependencies

Create your Python script and install the package dependencies, then upload to object storage accessible by Onehouse.

Step 1a: Download the quickstart bundle

This quickstart includes a PySpark script, requirements.txt and Dockerfile to help you run a simple PySpark Job and build all the package dependencies into a virtual environment.

Download the demo PySpark application: 📎 PySpark.zip.

- In your local environment, unzip PySpark.zip, then navigate to the

PySparkparent directory.

Step 1b: Create the virtual environment (venv)

- Find the architecture for the instance type running on the Cluster you created in Onehouse.

oh-generalfamily instances on AWS use the arm64 architecture.oh-generalfamily instances on GCP use the amd64 architecture.

- Ensure you have a

Dockerfileandrequirements.txtin your working directory. If you're not using the quickstart bundle, create aDockerfilewith the following content:# syntax=docker/dockerfile:1.7

FROM ubuntu:24.04 AS builder

ARG TARGETARCH

ENV DEBIAN_FRONTEND=noninteractive \

PIP_NO_CACHE_DIR=1 \

PYTHONDONTWRITEBYTECODE=1 \

PYTHONUNBUFFERED=1

RUN apt-get update && apt-get install -y --no-install-recommends \

python3 python3-venv python3-pip python3-dev \

build-essential ca-certificates && \

rm -rf /var/lib/apt/lists/*

WORKDIR /workspace

COPY requirements.txt .

# Create venv and install deps

RUN python3 -m venv /opt/venv && \

/opt/venv/bin/pip install --upgrade pip "setuptools>=68.0.0" "wheel>=0.40.0" && \

/opt/venv/bin/pip install -r requirements.txt

# Install venv-pack and pack the venv

RUN /opt/venv/bin/pip install venv-pack && \

/opt/venv/bin/python -m venv_pack -p /opt/venv -o /workspace/venv.tar.gz

RUN echo "Built for arch: ${TARGETARCH}"

# Export stage to extract the tarball without running a container

FROM scratch AS export

COPY --from=builder /workspace/venv.tar.gz /venv.tar.gznoteThis Dockerfile uses a multi-stage build to create a portable virtual environment. The

exportstage outputs only thevenv.tar.gzfile, keeping the build artifacts separate from the final output. - Use docker buildx to produce a venv containing all the packages from your

requirements.txtfile. Run the command for your Cluster's architecture:# arm64

docker buildx build --platform linux/arm64 --target export --output type=local,dest=. .

# amd64

docker buildx build --platform linux/amd64 --target export --output type=local,dest=. . - The command should create a file

venv.tar.gzin your current working directory.

Step 1c: Upload your Python script and venv to a bucket

- Upload

sales_data.pyandvenv.tar.gzto an object storage bucket accessible by Onehouse.tipOnehouse will always have access to the bucket named

onehouse-customer-bucket-<request_id_prefix>. You can retrieve the request ID for your project by clicking on the settings popup in the bottom left corner of the Onehouse console.

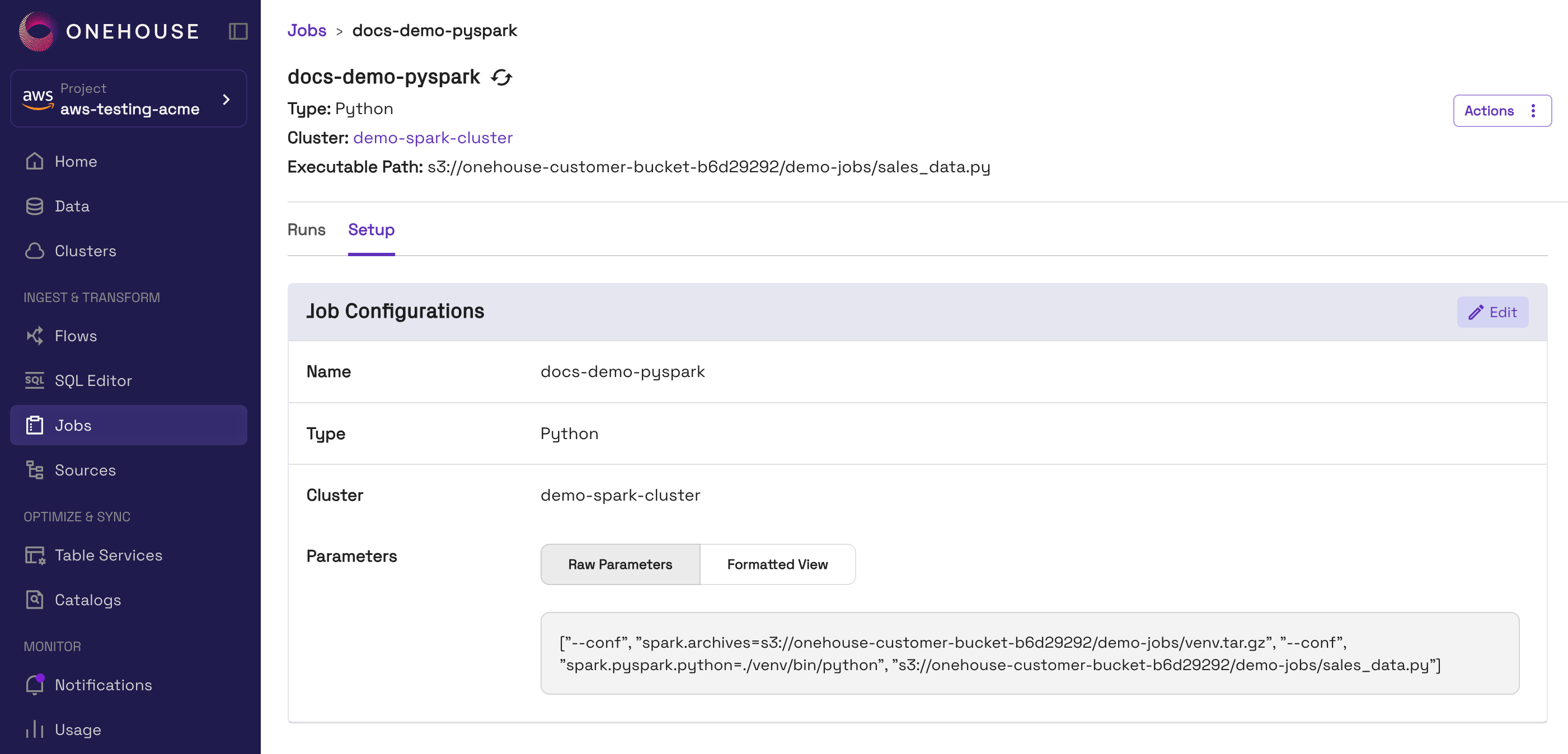

Step 2: Create the Job in Onehouse

- First, ensure you have the Project Admin role. This is currently a requirement for creating Jobs.

- In the Onehouse console, open Jobs and click Create Job.

- Configure the Job by filling in the following fields:

- Name: Specify a unique name to identify the Job.

- Type: Select 'Python'.

- Parameters: Specify parameters for the Job, including the executable filepath and the architecture-specific venv file you created. Ensure you follow the Python Job parameters guidelines. You can copy-paste the following parameters for the

PySparkexample, replacing both paths with your own:["--conf", "spark.archives=s3://onehouse-customer-bucket-12345/demo-jobs/venv.tar.gz#environment", "--conf", "spark.pyspark.python=./environment/bin/python", "s3://onehouse-customer-bucket-12345/demo-jobs/sales_data.py"]

- Create the Job. This will not immediately run the Job.

Step 3: Run the Job

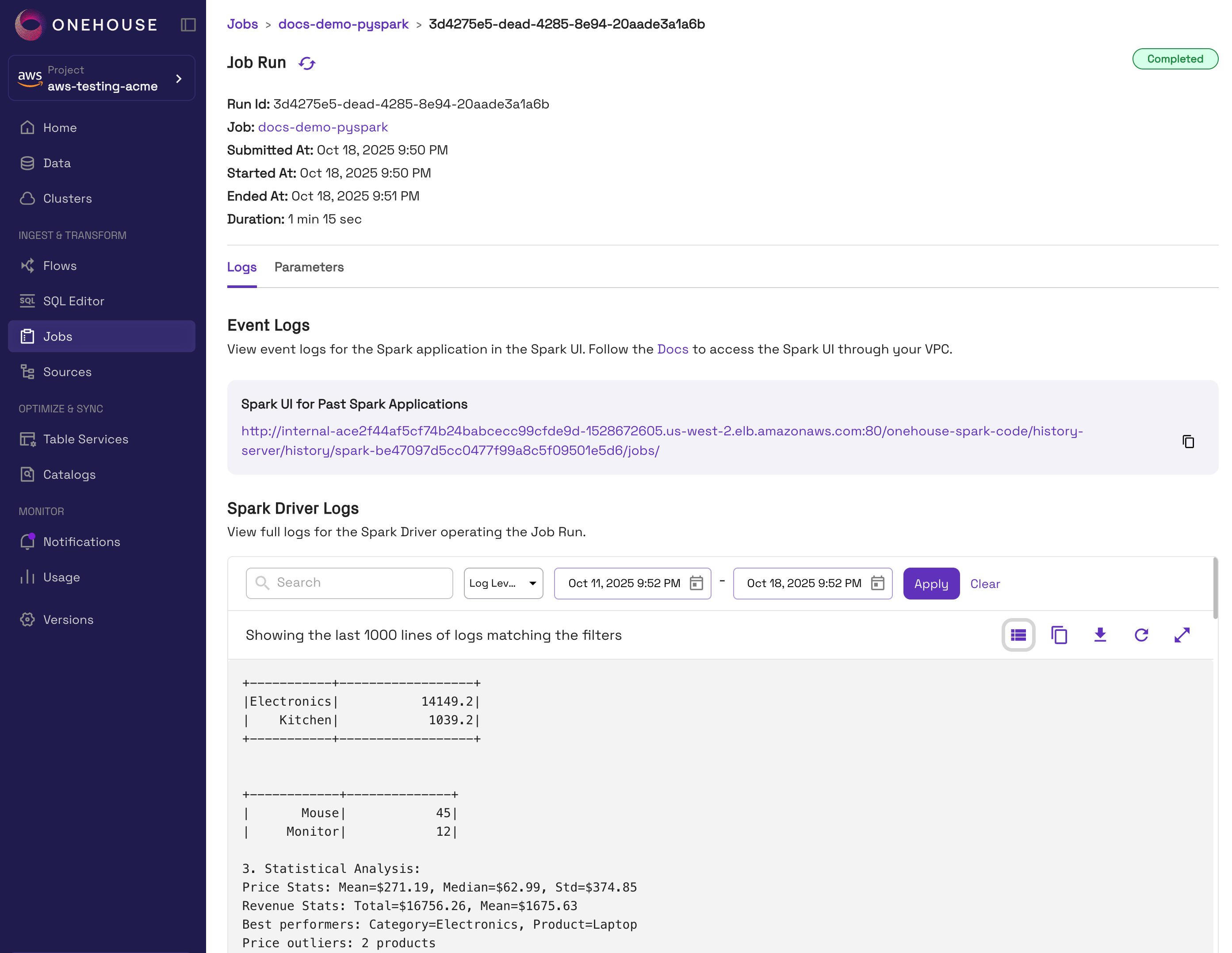

After the Job is created, you can trigger a run. Learn more about running Jobs here.

- In the Onehouse console, navigate to the Jobs page.

- Open the Job you'd like to run, then click Actions > Run. You can also use the

RUN JOBAPI command. - When the Job starts running, you can view the driver logs in the Onehouse console by clicking into the Job run. Read more here on monitoring Job runs.

Configuring Python Job parameters

You can configure the parameters for your Job as a JSON array of strings. You should include the required parameters, and be sure to add parameters in the proper order.

| Order | Parameter | Description |

|---|---|---|

| 1 | --conf | Optional Spark configuration properties (can be repeated) |

| 2 | /path/to/script | The path to your executable .py file in Cloud Storage (required) |

| 3 | arg1, arg2, … | Arguments passed to your Python script |

Example:

[

"--conf","spark.archives=/path/to/venv.tar.gz#environment"

"--conf", "spark.pyspark.python=./environment/bin/python"

"--conf", "spark.executor.memory=4g",

"--conf", "spark.executor.cores=2",

"/path/to/my-spark-script.py",

"arg1", "--flag1"

]

Required parameters

The following properties are required:

- The cloud storage bucket path containing the python script

- Setting spark.pyspark.python as ./environment/bin/python

- Configurations for virtual environments if you are including package dependencies. See more here.

Additional parameters

Optionally, you can include any additional Apache Spark properties you'd like to pass to the Job. Currently, --conf is supported.

Follow these guidelines to ensure your Jobs run smoothly:

- Add any

spark.extraListenersin parameters. Do not add these in your code directly. - If you are setting

spark.driver.extraJavaOptionsorspark.executor.extraJavaOptions, you must add"-Dlog4j.configuration=file:///opt/spark/log4j.properties". - Do not set the following Apache Spark configurations:

- spark.kubernetes.node.selector.*

- spark.kubernetes.driver.label.*

- spark.kubernetes.executor.label.*

- spark.kubernetes.driver.podTemplateFile

- spark.kubernetes.executor.podTemplateFile

- spark.metrics.*

- spark.eventLog.*

Managing Package Dependencies

You may install Python package for Jobs by packaging them in a virtual environment. Dependencies are installed by each Job's driver, rather than at the Cluster-level, to prevent dependency conflicts between Jobs running on the same Cluster.

IMPORTANT: PySpark Requirement

You must install PySpark for your Python Jobs to run successfully. We suggest version 3.5.2+.

Pre-Installed Packages

The following package come pre-installed on every 'Spark' Cluster:

- Apache Spark 3.5.2

- Apache Hudi 0.14.1

- Apache Iceberg 1.10.0 (only if the Cluster uses an Iceberg REST Catalog)

Do not include these package in your virtual environment to keep the size small and prevent version conflicts.

Installing Packages for Python Jobs

To provide Python packages for your Job, you can perform the following steps:

- Create a Python virtual environment (venv).

- Install all necessary package dependencies within your venv.

- Package the venv into into a compressed archive (eg.

venv.tar.gz) and upload it to your object storage bucket.

Guides for each of these steps are available in the quickstart above.

Onehouse Clusters may run on different CPU architectures from your local machine. We recommend using Docker buildx to build your virtual environment using the same architecture as your Onehouse Cluster.

For the standard Onehouse instance families:

oh-generalfamily instances on AWS use the arm64 architecture.oh-generalfamily instances on GCP use the amd64 architecture.

Referencing Python Dependencies in Jobs

In your Job parameters, you must specify Apache Spark configurations to load the venv containing your Python package dependencies.

Example:

["--conf", "spark.archives=<path-to-venv>#environment", "--conf", "spark.pyspark.python=./environment/bin/python", "script.py"]