Datadog

Overview

Onehouse offers a Datadog integration that allows you to monitor your Onehouse deployment using Datadog's powerful monitoring and observability tools.

Set up Datadog

Enabling the Datadog Agent

During Onehouse onboarding, you will be asked to select "enable Datadog" in the deployment Terraform config.yaml or CloudFormation template. This will give Onehouse the necessary permissions to install the Datadog Agent on your behalf.

Configuring the Datadog Agent

The Datadog Agent is installed on the Onehouse-managed Kubernetes cluster, where it collects metrics from the Onehouse deployment. To have the agent installed, file a support ticket with the Onehouse team. The Onehouse team will install the agent and configure it to collect the appropriate metrics.

Datadog API Key

The Datadog Agent needs a Datadog API key to send metrics to Datadog. Store your API key in AWS Secrets Manager as a secret named onehouse-datadog-api-key, and add the following tag to the secret: accessibleTo=onehouse.
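As an illustration, the secret described above could be created with boto3 along the following lines. This is a minimal sketch, not an official Onehouse procedure: the function names build_secret_request and create_datadog_secret are hypothetical, the region is a parameter you supply, and storing the key as a plain string (rather than JSON) is an assumption, since the expected secret format is not stated here. Confirm the format with the Onehouse team if in doubt.

```python
def build_secret_request(api_key: str) -> dict:
    """Build the arguments for the Secrets Manager CreateSecret call.

    The secret name and tag come from the instructions above.
    Assumption: the API key is stored as a plain string.
    """
    return {
        "Name": "onehouse-datadog-api-key",
        "SecretString": api_key,
        "Tags": [{"Key": "accessibleTo", "Value": "onehouse"}],
    }


def create_datadog_secret(api_key: str, region: str) -> str:
    """Create the secret in AWS Secrets Manager and return its ARN.

    Requires boto3 and AWS credentials allowed to create secrets.
    """
    import boto3  # imported here so the helper above works without boto3

    client = boto3.client("secretsmanager", region_name=region)
    response = client.create_secret(**build_secret_request(api_key))
    return response["ARN"]
```

Calling create_datadog_secret with your API key and the AWS region of your Onehouse deployment creates the tagged secret in one step. Reading the key from an environment variable or a prompt keeps it out of your shell history and source control.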

Datadog Dashboard

The Datadog Agent will be configured to send metrics to Datadog. The list of available metrics is configurable by the Onehouse team, so include any specific metrics you would like to see in Datadog in your support ticket. To view the metrics, open the Datadog app and select the "Metrics" tab, where metrics from the Onehouse EKS cluster will be populated. You can then create your own dashboards and alerts based on these metrics. You can also configure Spark Jobs monitoring by adding the Datadog configs to any Spark Job you run.

Onehouse Jobs Monitoring

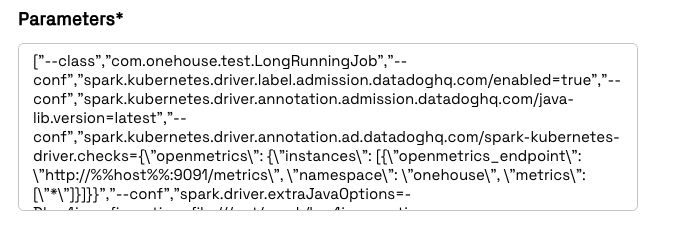

You can monitor your Onehouse Spark Jobs in Datadog using APM Spark Jobs Monitoring. To enable it, edit your Spark Job and add the following configs:

spark.kubernetes.driver.label.admission.datadoghq.com/enabled=true

spark.kubernetes.driver.annotation.admission.datadoghq.com/java-lib.version=latest

spark.kubernetes.driver.annotation.ad.datadoghq.com/spark-kubernetes-driver.checks={"openmetrics": {"instances": [{"openmetrics_endpoint": "http://%%host%%:9091/metrics", "namespace": "onehouse", "metrics": ["*"]}]}}

spark.driver.extraJavaOptions=-Dlog4j.configuration=file:///opt/spark/log4j.properties -Ddd.integration.spark.enabled=true -Ddd.service=<YOUR SPARK SERVICE> -Ddd.env=<DEV/PROD> -Ddd.version=1.0 -Ddd.tags=job:<YOUR SPARK JOB NAME>,env:<dev/prod>

You can add the configs above directly to the Spark Job configs via the Onehouse UI or the Onehouse API.
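If you manage several jobs, it can help to generate these configs rather than hand-edit them. The following is a minimal sketch that fills in the placeholders from the list above. The function name datadog_spark_confs and its parameters are hypothetical, and the default version of 1.0 simply mirrors the example value. The keys use the lowercase datadoghq.com domain, which is the form used by Datadog's Admission Controller labels and Autodiscovery annotations.

```python
import json


def datadog_spark_confs(service: str, env: str, job_name: str,
                        version: str = "1.0") -> dict:
    """Return the Datadog-related Spark configs as a key/value mapping."""
    # OpenMetrics check that scrapes the driver's metrics endpoint.
    checks = {
        "openmetrics": {
            "instances": [
                {
                    "openmetrics_endpoint": "http://%%host%%:9091/metrics",
                    "namespace": "onehouse",
                    "metrics": ["*"],
                }
            ]
        }
    }
    # JVM options that enable the Spark integration and tag the job.
    java_opts = " ".join([
        "-Dlog4j.configuration=file:///opt/spark/log4j.properties",
        "-Ddd.integration.spark.enabled=true",
        f"-Ddd.service={service}",
        f"-Ddd.env={env}",
        f"-Ddd.version={version}",
        f"-Ddd.tags=job:{job_name},env:{env}",
    ])
    return {
        "spark.kubernetes.driver.label.admission.datadoghq.com/enabled": "true",
        "spark.kubernetes.driver.annotation.admission.datadoghq.com/java-lib.version": "latest",
        "spark.kubernetes.driver.annotation.ad.datadoghq.com/spark-kubernetes-driver.checks": json.dumps(checks),
        "spark.driver.extraJavaOptions": java_opts,
    }
```

Each entry in the returned mapping corresponds to one key=value line that you can paste into the Spark Job configs. How the configs are submitted through the Onehouse API is not covered by this sketch.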