Credential Management

Onehouse Credential Management enables users to securely store, update and delete the credentials required for Stream Capture operations. The supported credential management options are:

- Onehouse Managed Secrets(OMS)

- Bring Your Own Secrets(BYOS)

Onehouse Managed Secrets(OMS)

Credentials are securely stored by Onehouse. Users enter the relevant credential information, such as username/password or API keys, in the Onehouse UI when adding a source or a catalog.

Onehouse projects use Onehouse Managed Secrets by default. This is determined by the “credential_management_type=OMS” config in the terraform scripts.

Refer to ‘Link Your Account(AWS, GCP)’ for details on terraform configurations.

Credential lifecycle management

NOTE: Currently, users are expected to pause the streams before making changes to the Secret used in the source configured for the stream. Resume the streams after the change is done.

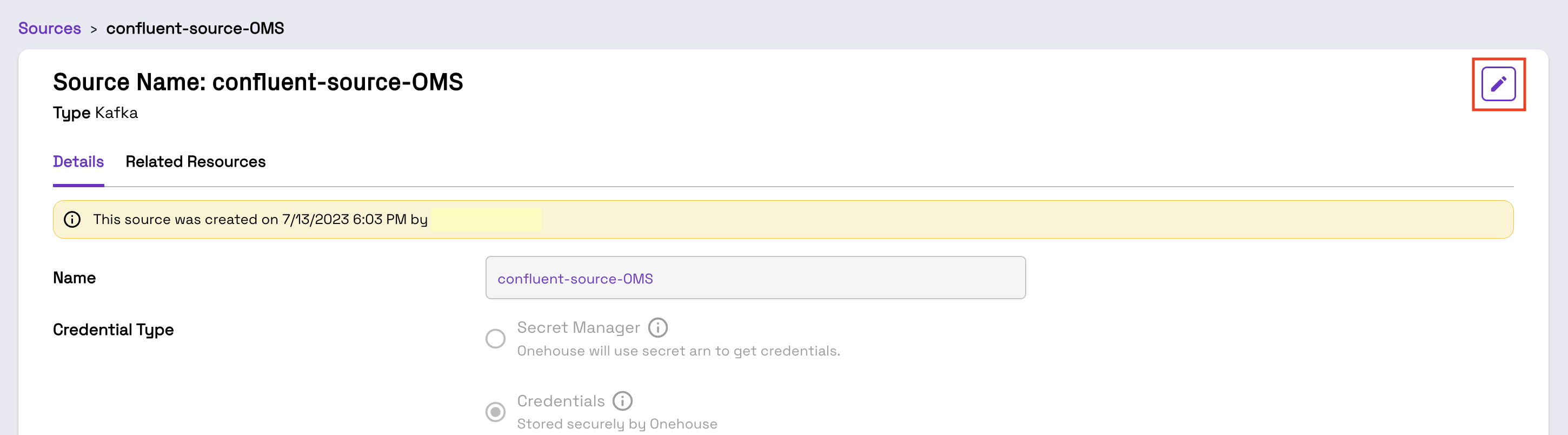

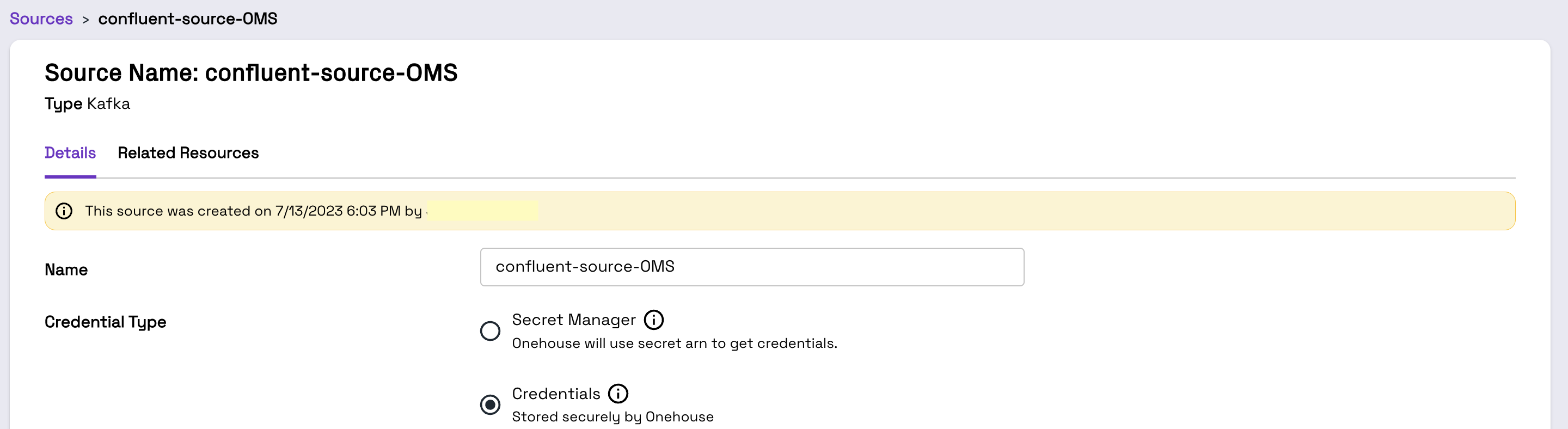

To update credentials, such as changing a username/password or rotating a key, use the Onehouse Source edit flow.

Clicking the edit button enables the Credential Type and relevant fields for editing.

Deleting a source also deletes all credentials used in that source.

Usage

Use the “Credentials” option under “Credential Type” in the Add/edit Source flow.

Bring your own secrets(BYOS)

Users store credentials as Secrets in the Secret Manager of the linked cloud account, i.e., AWS Secrets Manager or Google Cloud Secret Manager. Use the AWS Secrets Manager console or the Google Cloud Secret Manager console to create a new secret, then enter the Secret Resource Name in the Onehouse UI during the source creation flow.

Secrets for different sources/resources are expected to be stored in the JSON format described under ‘Secrets JSON format for Bring your own secret(BYOS)’.

It is recommended to use BYOS, which can be configured with the “credential_management_type=BYOS” config in the terraform scripts. During the onboarding process, the terraform scripts additionally grant the Onehouse gke_node_role (GCP) and eks_node_role (AWS) read permissions to Secrets stored in the Secret Manager of the linked cloud account. Read permissions are limited to:

- All Secrets with secret name prefix “onehouse-” in the linked GCP cloud account

- All Secrets with tag ‘accessibleTo’ set to ‘onehouse’ in the linked AWS cloud account
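The two scoping rules above can be checked before a secret is referenced from a source. The following is a minimal sketch, not an Onehouse tool: the function names are hypothetical, the GCP check assumes it is given the short secret name rather than the full resource path, and the AWS check assumes tags in the `[{"Key": ..., "Value": ...}]` shape used by AWS Secrets Manager.

```python
import json

GCP_PREFIX = "onehouse-"                 # name prefix from the list above
AWS_TAG = ("accessibleTo", "onehouse")   # tag key/value from the list above


def gcp_secret_is_readable(secret_name: str) -> bool:
    """True if a GCP secret's short name falls inside the read scope."""
    return secret_name.startswith(GCP_PREFIX)


def aws_secret_is_readable(tags: list) -> bool:
    """True if an AWS secret's tag list carries accessibleTo=onehouse."""
    key, value = AWS_TAG
    return any(t.get("Key") == key and t.get("Value") == value for t in tags)


def aws_create_secret_params(name: str, payload: dict) -> dict:
    """Build keyword arguments for a create-secret request (hypothetical helper).

    The payload is serialized to a JSON string and the required tag is attached.
    """
    key, value = AWS_TAG
    return {
        "Name": name,
        "SecretString": json.dumps(payload),
        "Tags": [{"Key": key, "Value": value}],
    }
```

If boto3 is available, the dictionary returned by `aws_create_secret_params` could be passed as `boto3.client("secretsmanager").create_secret(**params)`; creating the secret through the cloud console, as described above, achieves the same result.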

Credential lifecycle management

NOTE: Currently, users are expected to pause the streams before making changes to the Secret used in the source configured for the stream. Resume the streams after the change is done.

Secret Edit and Rotation

You can modify or rotate your BYOS secrets by following these steps:

- First, we recommend that you pause the Stream Captures that will be affected by the secret rotation.

- To update credentials, such as changing a username/password or rotating a key, use the Secret Manager management console of the linked cloud account (AWS or GCP).

- If required, navigate to the Onehouse Source where the secret was configured, click edit and update the Secret Resource Name.

- Update the credentials and click save.

- Resume any Stream Captures that you may have previously paused.
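The ordering of these steps (pause, update, resume) can be sketched as a small helper. This is only an illustration under stated assumptions: `pause`, `update_secret` and `resume` are hypothetical callables standing in for whatever performs each step (for example, a person working in the Onehouse UI and the cloud console), and this page does not describe an API for them.

```python
from typing import Callable, Iterable, List


def rotate_byos_secret(
    stream_ids: Iterable[str],
    pause: Callable[[str], None],
    update_secret: Callable[[], None],
    resume: Callable[[str], None],
) -> List[str]:
    """Pause the affected streams, update the secret, then resume them.

    Returns an ordered log of the actions taken. If update_secret raises,
    the exception propagates and the streams are left paused.
    """
    log: List[str] = []
    paused: List[str] = []
    for sid in stream_ids:
        pause(sid)
        paused.append(sid)
        log.append(f"pause:{sid}")
    update_secret()
    log.append("update")
    for sid in paused:
        resume(sid)
        log.append(f"resume:{sid}")
    return log
```

Leaving the streams paused when the update fails is a design choice of this sketch: it avoids resuming a stream against a secret that may be in an unknown state.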

Secret Deletion

You can delete the BYOS secrets by following these steps:

- Before deleting a secret, ensure that sources using the secret are updated or deleted; otherwise, the corresponding Stream Capture operations will be impacted.

- To delete credentials, delete the Secret using the Secret Manager management console of the linked cloud account.

Usage

Use the “Secret Manager” option under “Credential Type” in the Add/edit Source flow.

Additional Details

- By default, Onehouse Managed Secrets(OMS) is enabled, and users will see permission errors if they try to use BYOS.

- If Bring Your Own Secrets(BYOS) is configured for your Onehouse project via terraform scripts, users will be able to use either OMS or BYOS.

- After a source is added, credentials such as username/password or API keys are shown as masked values in the Source details and edit flows.

Limitation

- The Datahub metastore auth token cannot be updated from the Onehouse UI. Please contact Onehouse support if an auth token update is needed.

Secrets JSON format for Bring your own secret(BYOS)

Kafka with SASL

Depending on the Kafka distribution, the credentials are either an API key/API secret pair or a username/password pair; in both cases, provide them as the username and password values below. For an Apache Kafka source with SASL, provide the API key and API secret as the values for username and password.

{
  "username": "<value>",
  "password": "<value>"
}

Kafka with TLS

{
  "keystore_password": "<value>",
  "key_password": "<value>"
}

Postgres or MySQL Database Credentials

Used for Postgres or MySQL database sources.

{
  "username": "<value>",
  "password": "<value>"
}

Confluent Account

Confluent account credentials used in the Confluent CDC source.

{
  "key": "<value>",
  "secret": "<value>"
}

Confluent Schema Registry

{
  "api_key": "<value>",
  "api_secret": "<value>"
}

Datahub Metastore

{
  "auth_token": "<value>"
}
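Before saving a secret in the cloud Secret Manager, it can help to confirm that its JSON carries the keys listed in the formats above. The following is a minimal sketch: the key sets are copied from this page, while the type labels (`kafka_sasl`, `database`, and so on) and the function name are hypothetical names chosen for the example. Whether Onehouse tolerates extra keys is not stated here, so the sketch does not flag them.

```python
import json

# Required keys per secret format, copied from the formats above.
# The labels used as dictionary keys are hypothetical names for this sketch.
REQUIRED_KEYS = {
    "kafka_sasl": {"username", "password"},
    "kafka_tls": {"keystore_password", "key_password"},
    "database": {"username", "password"},
    "confluent_account": {"key", "secret"},
    "confluent_schema_registry": {"api_key", "api_secret"},
    "datahub_metastore": {"auth_token"},
}


def validate_secret(secret_type: str, secret_string: str) -> list:
    """Return a list of problems; an empty list means the required keys are present."""
    required = REQUIRED_KEYS[secret_type]
    try:
        payload = json.loads(secret_string)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(payload, dict):
        return ["top-level value must be a JSON object"]
    # Report each required key that is absent, in a stable order.
    return [f"missing key: {k}" for k in sorted(required - set(payload))]
```

For example, `validate_secret("kafka_tls", '{"keystore_password": "x"}')` reports that `key_password` is missing.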