Onehouse Table Format Conversion and Catalog Sync

This guide outlines the steps to convert an existing Apache Hudi table to Apache Iceberg and synchronize the data to the Snowflake Catalog. The process involves making the table's files visible to Onehouse and running a metadata synchronization job that uses both the Onetable (XTable) catalog and the Databricks Unity Catalog.

Note: The table format conversion and catalog synchronization features require Apache Hudi >0.14.

Steps

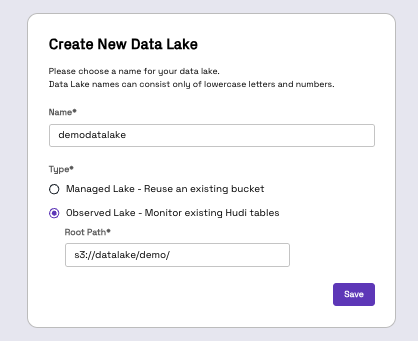

- Follow the Onehouse documentation at https://docs.onehouse.ai/docs/product/manage-data/external-tables to create a new data lake of type “observed lake”. This allows Onehouse to monitor the Apache Hudi tables.

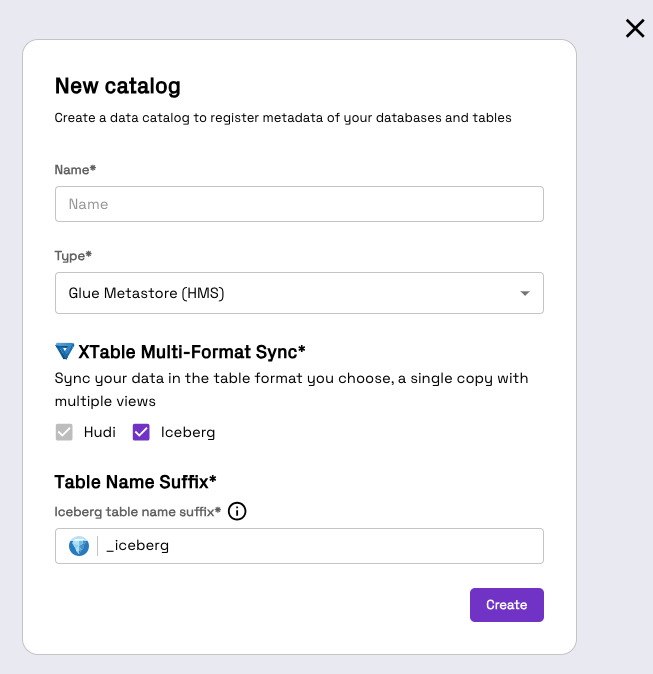

- Create one or more catalogs. For example, create a new catalog of type “Glue” with Apache Iceberg selected and choose the suffix string for the table name that will be registered in Glue.

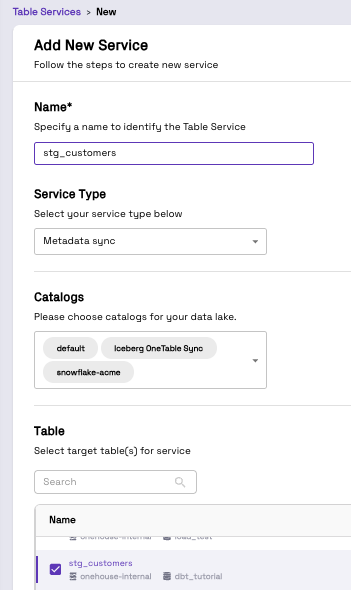

- Create a new table service of type “Metadata Sync” and choose one or more catalogs. For this example, we picked the Glue / default, Snowflake, and Onetable catalogs. This creates a job that triggers automatically after every commit from your Hudi writers (see the triggering mechanism description below for details).

Onehouse table service triggering mechanism

The Onehouse automated triggering mechanism is designed to adapt to the speed of your pipeline. Onehouse monitors the Hudi metadata for commits, and as soon as it detects a commit from your writers (detection is configurable down to seconds-level granularity), it triggers the table service operation. If the Metadata Sync operation fails, Onehouse handles retries natively.
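
To make the commit-driven trigger concrete, here is a minimal Python sketch of the general idea. It is not Onehouse's implementation. It assumes the pre-1.0 Hudi timeline layout, where completed instants appear as files such as `<instant>.commit` directly under the table's `.hoodie/` directory, and it assumes the table is on a local filesystem. The names `completed_instants`, `run_with_retries`, and `poll_once`, as well as the `sync` callback, are hypothetical and exist only for illustration.

```python
import os
import time
from typing import Callable, List, Set

# Suffixes of completed write actions on the Hudi timeline.
# Assumption: pre-1.0 layout, instant files directly under <table>/.hoodie/.
COMPLETED_SUFFIXES = (".commit", ".deltacommit", ".replacecommit")


def completed_instants(hoodie_dir: str) -> Set[str]:
    """Return the instant timestamps of completed commits in hoodie_dir."""
    instants = set()
    for name in os.listdir(hoodie_dir):
        # Requested and in-flight instants end in ".requested" or ".inflight",
        # so the suffix check skips them.
        if name.endswith(COMPLETED_SUFFIXES):
            instants.add(name.split(".", 1)[0])
    return instants


def run_with_retries(sync: Callable[[str], None], instant: str,
                     attempts: int = 3, backoff_s: float = 0.0) -> bool:
    """Call sync(instant), retrying on failure. Return True on success."""
    for attempt in range(1, attempts + 1):
        try:
            sync(instant)
            return True
        except Exception:
            if attempt < attempts:
                time.sleep(backoff_s * attempt)  # simple linear backoff
    return False


def poll_once(hoodie_dir: str, seen: Set[str],
              sync: Callable[[str], None]) -> List[str]:
    """Sync every completed instant not yet in `seen`, oldest first."""
    synced = []
    for instant in sorted(completed_instants(hoodie_dir) - seen):
        if not run_with_retries(sync, instant):
            break  # leave this instant unseen so the next poll retries it
        seen.add(instant)
        synced.append(instant)
    return synced
```

A caller would invoke `poll_once` in a loop with a sleep between iterations; the length of that sleep plays the role of the detection granularity described above. Processing instants in sorted order and stopping at the first failure keeps the target catalog from skipping ahead of a commit that has not yet synced.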