Onehouse Cross Account Data Access

At times, Onehouse customers may want to read data from sources in other AWS accounts, or write to data lakes in other AWS accounts, through the Onehouse product. One of Onehouse’s core architectural principles is that data never leaves the customer’s VPC. This guide shows the steps for customers who intentionally want to read or write data outside of the VPC in which the Onehouse product is deployed.

Network Setup Changes

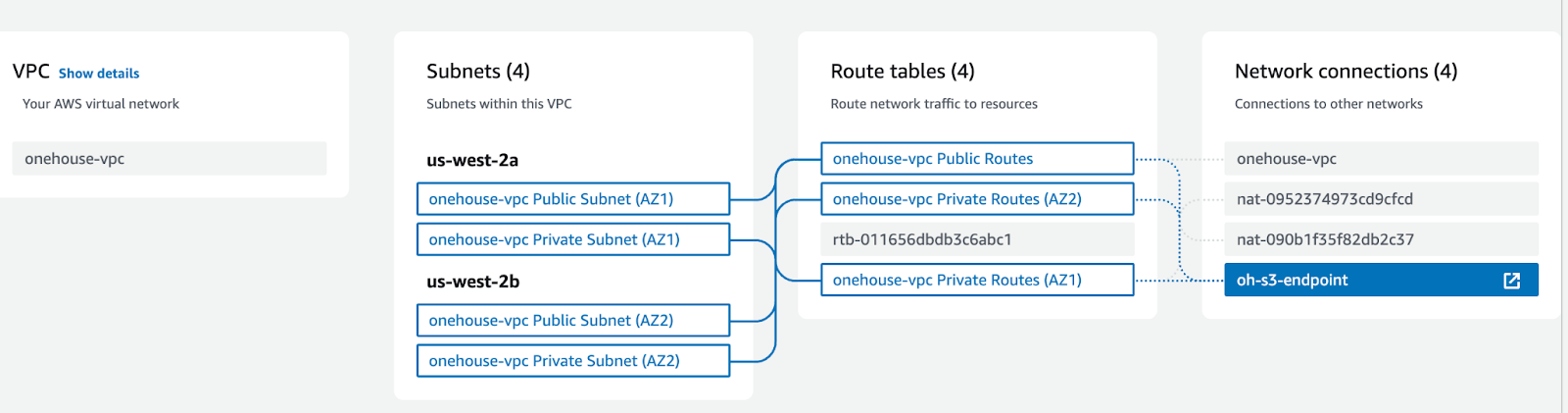

In order to minimize cost for cross account data transfers, Onehouse recommends that customers set up an S3 gateway endpoint that is connected to the two private subnets within the VPC that the Onehouse EKS cluster is deployed. The steps to set up the S3 endpoint are listed below:

- Navigate to the VPC AWS console, select endpoints on the left selector

- Create Endpoint. Search for S3 as the service, and select the gateway endpoint

- Specify the VPC that your EKS cluster is in for the S3 endpoint

- Attach your endpoint to the route-tables for your private subnets

In the end you should have a network setup that looks something like this:
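For teams that prefer scripting over the console, the endpoint steps listed above could be sketched with boto3 roughly as follows. This is a minimal sketch, not part of the Onehouse tooling: the function names are hypothetical, the VPC ID, region, and route table IDs are placeholders you would supply, and the second function assumes boto3 is installed and AWS credentials are configured.

```python
def gateway_endpoint_params(vpc_id, region, route_table_ids):
    """Build the arguments for an S3 gateway endpoint (EC2 CreateVpcEndpoint)."""
    if not route_table_ids:
        raise ValueError("attach at least one private-subnet route table")
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        # S3 gateway endpoints use a region-specific service name.
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": list(route_table_ids),
    }


def create_s3_gateway_endpoint(vpc_id, region, route_table_ids):
    """Create the endpoint and return its ID (requires boto3 and credentials)."""
    import boto3  # imported lazily so the helper above works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    params = gateway_endpoint_params(vpc_id, region, route_table_ids)
    response = ec2.create_vpc_endpoint(**params)
    return response["VpcEndpoint"]["VpcEndpointId"]
```

Pass the route tables associated with the two private subnets of the Onehouse EKS cluster's VPC, so that S3 traffic from those subnets goes through the gateway endpoint.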

AWS Access Role Change

The next step is to grant the AWS roles the needed permissions to perform cross account access. In this guide, we roughly follow the steps found in part 1 of this article by AWS.

Step 1: Add your S3 bucket to the Onehouse permissions

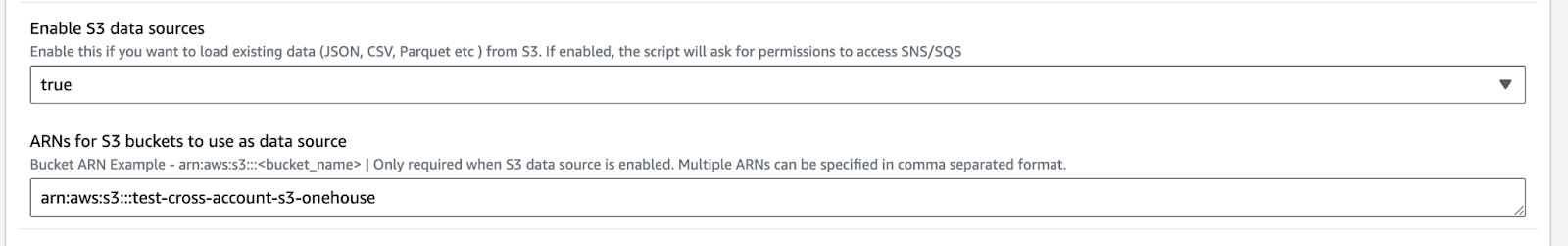

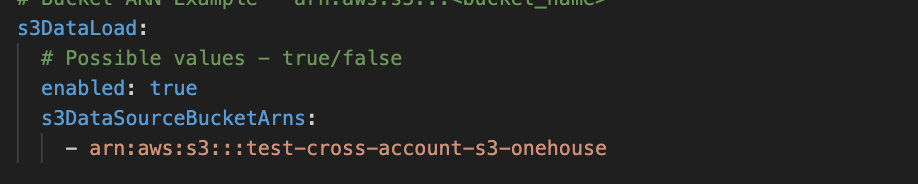

In either your Cloudformation or Terraform, enable S3DataSource and add your S3 bucket arn to the provided field. Listed below are the screenshots for both the Cloudformation and Terraform interfaces to add this.

Cloudformation:

Enable S3 data sources to True and add your S3 ARN in the Cloudformation parameters

Terraform:

Set this parameter to True in your config.yaml and add your S3 ARN

Step 2: Add Onehouse cluster permissions to the S3 bucket

Now, we need to add permissions to the S3 bucket that allow the Onehouse cluster to read from it. The steps are listed below:

- Navigate to your source AWS account and to your S3 bucket

- In the Permissions tab of your S3 bucket, add the policy below to your bucket policy, after filling in its values as described in the next item

- Fill in the values for these 3 variables in the policy below

  - ONEHOUSE CLUSTER'S ACCOUNT NUMBER

  - YOUR REQUEST ID PREFIX - this is attached to all Onehouse IAM roles and your cluster

  - CROSS ACCOUNT S3 BUCKET ARN - the ARN of the source S3 bucket

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AccessToDataSourceBucket",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::<ONEHOUSE CLUSTER'S ACCOUNT NUMBER>:role/onehouse-customer-eks-node-role-<YOUR REQUEST ID PREFIX>",
          "arn:aws:iam::<ONEHOUSE CLUSTER'S ACCOUNT NUMBER>:role/onehouse-customer-core-role-<YOUR REQUEST ID PREFIX>"
        ]
      },
      "Action": [
        "s3:GetBucketNotification",
        "s3:PutBucketNotification",
        "s3:ListBucket",
        "s3:ListBucketVersions",
        "s3:GetBucketVersioning"
      ],
      "Resource": "<CROSS ACCOUNT S3 BUCKET ARN>"
    },
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::<ONEHOUSE CLUSTER'S ACCOUNT NUMBER>:role/onehouse-customer-eks-node-role-<YOUR REQUEST ID PREFIX>",
          "arn:aws:iam::<ONEHOUSE CLUSTER'S ACCOUNT NUMBER>:role/onehouse-customer-core-role-<YOUR REQUEST ID PREFIX>"
        ]
      },
      "Action": [
        "s3:GetObject",
        "s3:GetObjectVersionAttributes",
        "s3:GetObjectRetention",
        "s3:GetObjectAttributes",
        "s3:GetObjectTagging",
        "s3:PutObject",
        "s3:DeleteObject"
      ],
      "Resource": "<CROSS ACCOUNT S3 BUCKET ARN>/*"
    }
  ]
}
```
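Filling in the three variables by hand is error-prone because each role ARN repeats the account number and prefix. As one possible approach, the substitution could be sketched in Python as below. The function names and the sample values (`111122223333`, `abc123`, `example-source-bucket`) are illustrative assumptions, not values from Onehouse; the role name patterns and actions are copied from the policy above.

```python
import json


def onehouse_role_arns(account_id, request_id_prefix):
    """ARNs of the two Onehouse roles named in the bucket policy."""
    base = f"arn:aws:iam::{account_id}:role"
    return [
        f"{base}/onehouse-customer-eks-node-role-{request_id_prefix}",
        f"{base}/onehouse-customer-core-role-{request_id_prefix}",
    ]


def bucket_policy(account_id, request_id_prefix, bucket_arn):
    """Return the cross-account bucket policy as a Python dict."""
    principal = {"AWS": onehouse_role_arns(account_id, request_id_prefix)}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Bucket-level actions apply to the bucket ARN itself.
                "Sid": "AccessToDataSourceBucket",
                "Effect": "Allow",
                "Principal": principal,
                "Action": [
                    "s3:GetBucketNotification",
                    "s3:PutBucketNotification",
                    "s3:ListBucket",
                    "s3:ListBucketVersions",
                    "s3:GetBucketVersioning",
                ],
                "Resource": bucket_arn,
            },
            {   # Object-level actions apply to every key under the bucket.
                "Effect": "Allow",
                "Principal": principal,
                "Action": [
                    "s3:GetObject",
                    "s3:GetObjectVersionAttributes",
                    "s3:GetObjectRetention",
                    "s3:GetObjectAttributes",
                    "s3:GetObjectTagging",
                    "s3:PutObject",
                    "s3:DeleteObject",
                ],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder inputs; replace with your own account number, prefix, and ARN.
    policy = bucket_policy("111122223333", "abc123",
                           "arn:aws:s3:::example-source-bucket")
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the bucket policy editor. If the bucket already has a policy, merge the two statements into its existing `Statement` list rather than overwriting it.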

Step 3 (Optional): If the S3 buckets are encrypted with KMS keys, add the following statement to the corresponding KMS key's policy

- Navigate to your source AWS account and to your KMS key

- Check the Key Policy tab. If the JSON policy is not already visible, switch to policy view

- Fill in the values for these 3 variables in the key policy statement

  - ONEHOUSE CLUSTER'S ACCOUNT NUMBER

  - YOUR REQUEST ID PREFIX - this is attached to all Onehouse IAM roles and your cluster

  - CROSS ACCOUNT KMS KEY ARN

```json
{
  "Sid": "Allow OneHouse access to this key",
  "Effect": "Allow",
  "Principal": {
    "AWS": [
      "arn:aws:iam::<ONEHOUSE CLUSTER'S ACCOUNT NUMBER>:role/onehouse-customer-eks-node-role-<YOUR REQUEST ID PREFIX>",
      "arn:aws:iam::<ONEHOUSE CLUSTER'S ACCOUNT NUMBER>:role/onehouse-customer-core-role-<YOUR REQUEST ID PREFIX>"
    ]
  },
  "Action": [
    "kms:Decrypt",
    "kms:GenerateDataKey"
  ],
  "Resource": "<CROSS ACCOUNT KMS KEY ARN>"
}
```

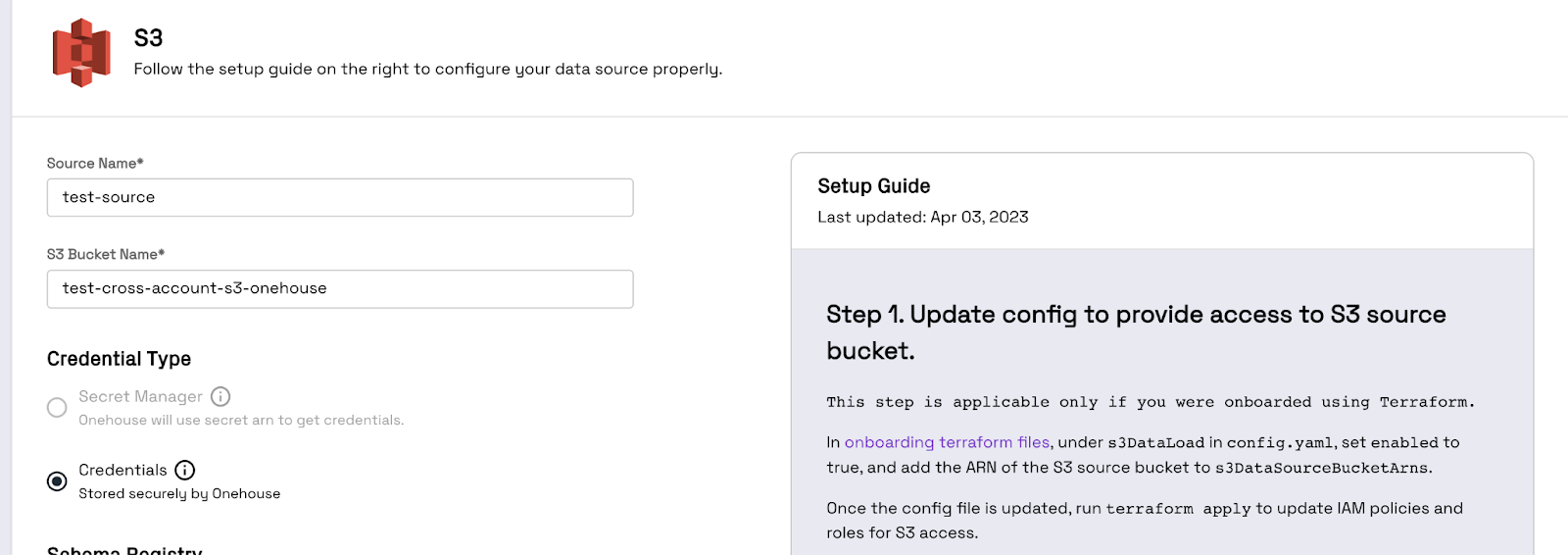
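Unlike the bucket policy, the KMS snippet is a single statement that has to be added to the `Statement` list of the key's existing policy rather than replacing it. A minimal sketch of that merge is shown below, assuming you have copied the current key policy JSON into a Python dict; the function names and sample ARNs are hypothetical.

```python
import copy


def onehouse_kms_statement(account_id, request_id_prefix, key_arn):
    """Build the key policy statement from Step 3 with the variables filled in."""
    base = f"arn:aws:iam::{account_id}:role"
    return {
        "Sid": "Allow OneHouse access to this key",
        "Effect": "Allow",
        "Principal": {
            "AWS": [
                f"{base}/onehouse-customer-eks-node-role-{request_id_prefix}",
                f"{base}/onehouse-customer-core-role-{request_id_prefix}",
            ]
        },
        "Action": ["kms:Decrypt", "kms:GenerateDataKey"],
        "Resource": key_arn,
    }


def add_statement(key_policy, statement):
    """Return a copy of key_policy with statement added.

    Any existing statement with the same Sid is replaced, so running the
    merge twice does not create duplicates. The input dict is not modified.
    """
    updated = copy.deepcopy(key_policy)
    existing = updated.get("Statement", [])
    if isinstance(existing, dict):  # a policy may hold a single statement object
        existing = [existing]
    kept = [s for s in existing if s.get("Sid") != statement["Sid"]]
    updated["Statement"] = kept + [statement]
    return updated
```

Keeping the existing statements intact matters here: removing the key's administrative statements from the policy can leave the key unmanageable.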

Step 4: Set up your S3 source

You can now go through the process of setting up your S3 source as normal and proceed with setting up stream captures that read from this S3 bucket!

Conclusion

Following this guide, Onehouse users can now read from S3 buckets that are in different accounts from the one that their Onehouse EKS cluster is deployed in. For any questions on this guide or any of the following related topics, please reach out to the Onehouse solutions team at solutions@onehouse.ai.

- Writing to a data lake in a different account

- IAM role setup

- Multi region S3 reads/writes

- Any other questions