AWS MSK Kafka

Description

Continuously stream data directly from Amazon Managed Streaming for Apache Kafka (MSK) into your Onehouse managed lakehouse.

Follow the setup guide within the Onehouse console to get started. Click Sources > Add New Source > MSK Kafka.

Prerequisites

Ensure that you granted permissions for MSK in the Terraform or CloudFormation configuration you used when connecting your cloud account.

Reading Kafka Messages

Onehouse supports the following serialization types for Kafka message values:

| Message Value Serialization Type | Schema Registry | Description |
|---|---|---|
| Avro | Required | Deserializes the message value in the Avro format. Send messages using Kafka-Avro-specific libraries; vanilla Avro libraries will not work. Read more in this article: Deserialzing Confluent Avro Records in Kafka with Spark |
| JSON | Optional | Deserializes the message value in the JSON format. |
| JSON_SR (JSON Schema) | Required | Deserializes the message value in the Confluent JSON Schema format. |
| Protobuf | Required | Deserializes the message value in the Protocol Buffers format. |
| Byte Array | N/A | Passes the raw message value through as a byte array without deserializing it. Also adds the message key as a string field. |

Onehouse currently does not support reading Kafka message keys for Avro, JSON, JSON_SR, and Protobuf serialized messages.
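The requirement for Kafka-specific Avro libraries can be illustrated with a short sketch. It assumes your producers use the Confluent wire format, in which each message value begins with a zero "magic" byte followed by a 4-byte big-endian schema ID, and the Avro-encoded payload comes after that header. Vanilla Avro libraries write only the payload, so there is no schema ID to look up in the Schema Registry. The function name `split_confluent_frame` is hypothetical and is not part of any Onehouse or Confluent API.

```python
import struct

MAGIC_BYTE = 0      # first byte of a Confluent-framed message value
HEADER_SIZE = 5     # 1 magic byte + 4-byte big-endian schema ID


def split_confluent_frame(message_value: bytes):
    """Return (schema_id, payload) for a Confluent-framed message value.

    Raises ValueError when the header is missing, which is what a value
    written by a plain Avro library would typically look like.
    """
    if len(message_value) < HEADER_SIZE:
        raise ValueError("message value is too short to carry a schema ID header")
    magic, schema_id = struct.unpack(">bI", message_value[:HEADER_SIZE])
    if magic != MAGIC_BYTE:
        raise ValueError("unexpected magic byte; value is not Confluent-framed")
    return schema_id, message_value[HEADER_SIZE:]
```

Running a sample message value through this check before configuring the source is a quick way to confirm that your producer library adds the header.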

How to Configure AWS VPC Peering and Test Connectivity

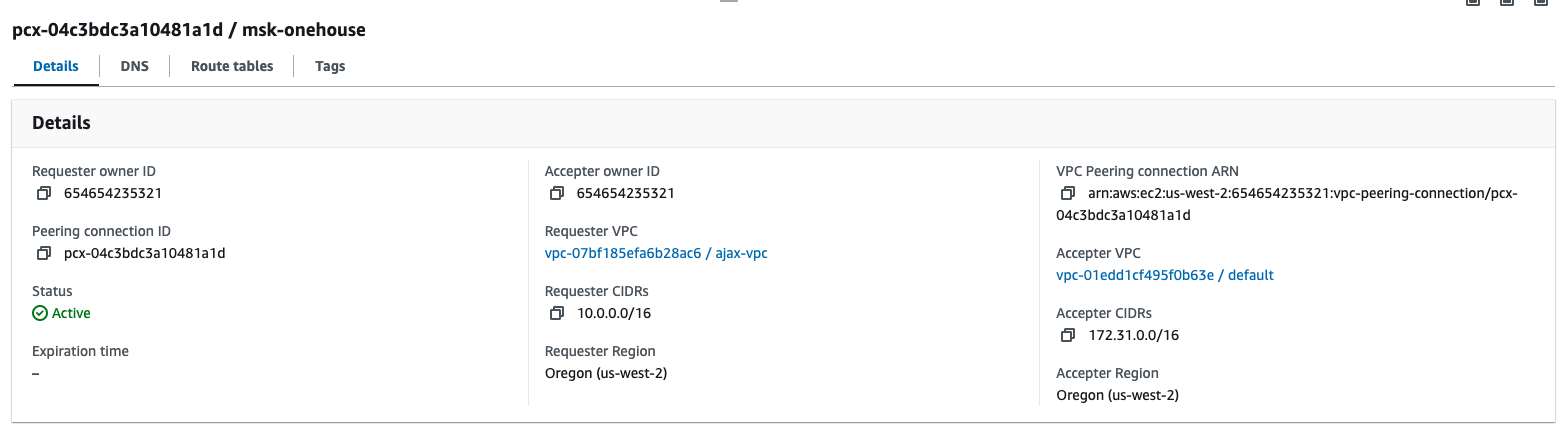

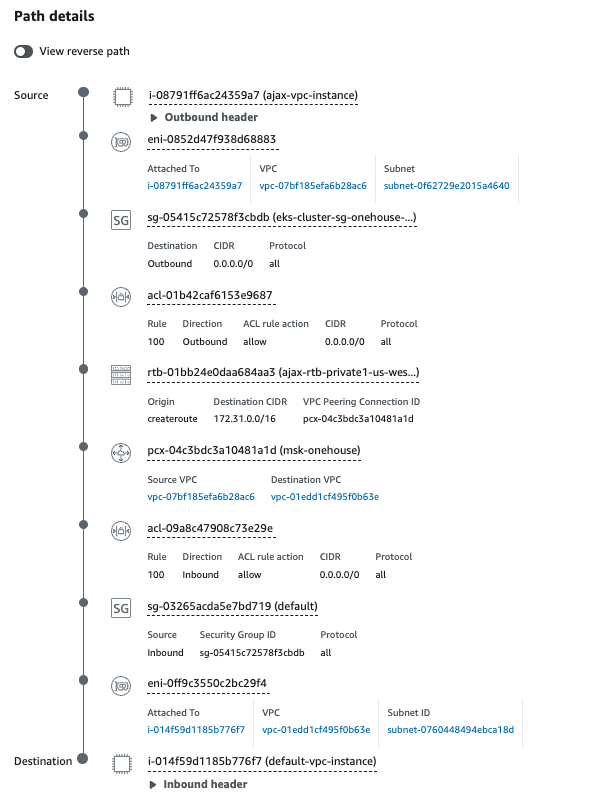

- Create a VPC Peering entry between the requesting VPC (Onehouse) and the accepter VPC (your MSK cluster).

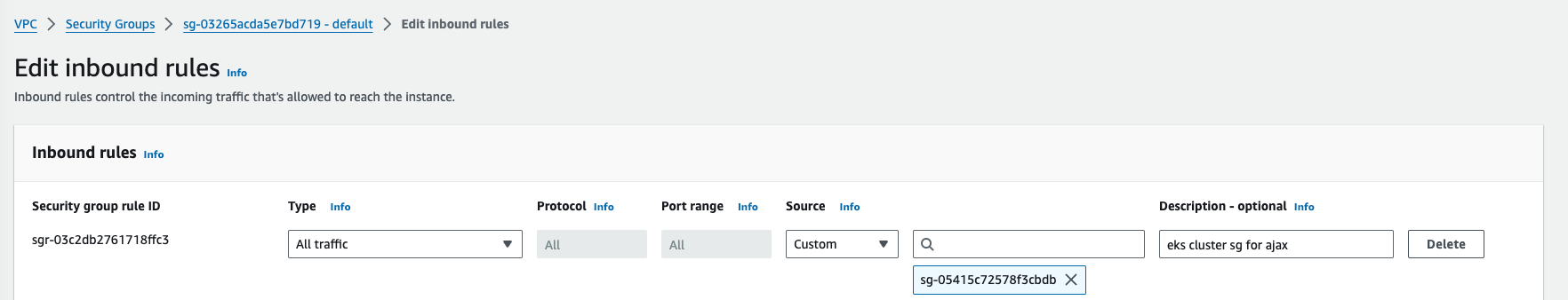

- Modify the MSK security group to allow "All Traffic" from the Onehouse EKS security group.

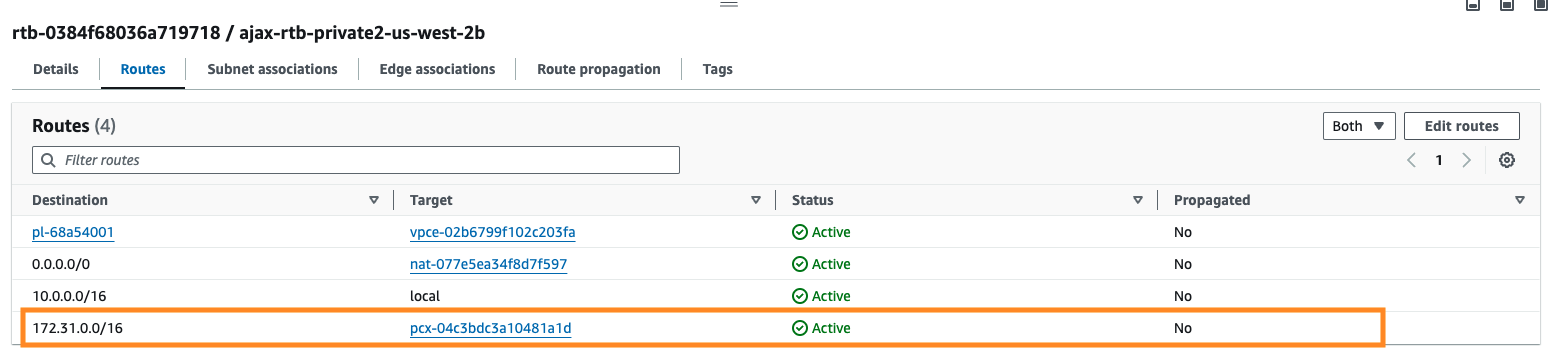

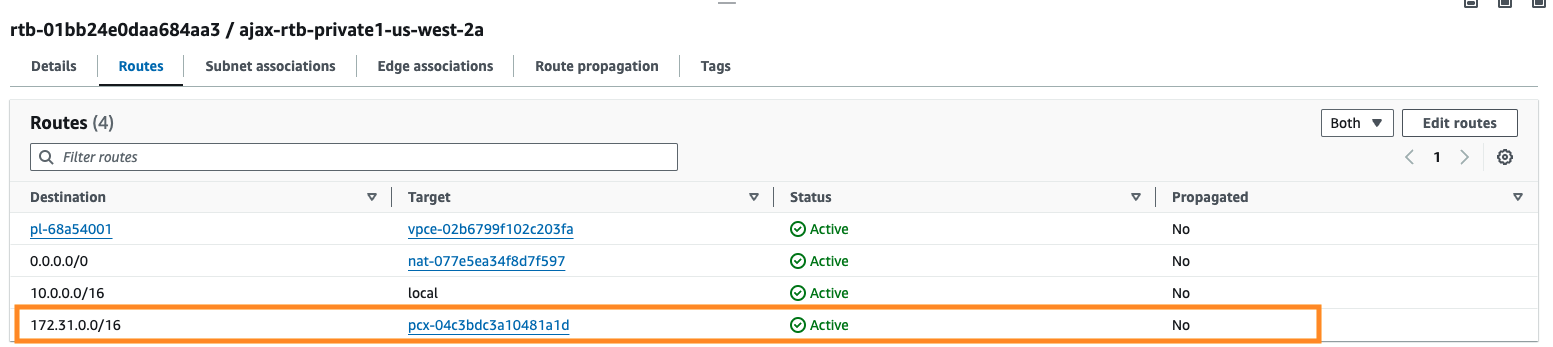

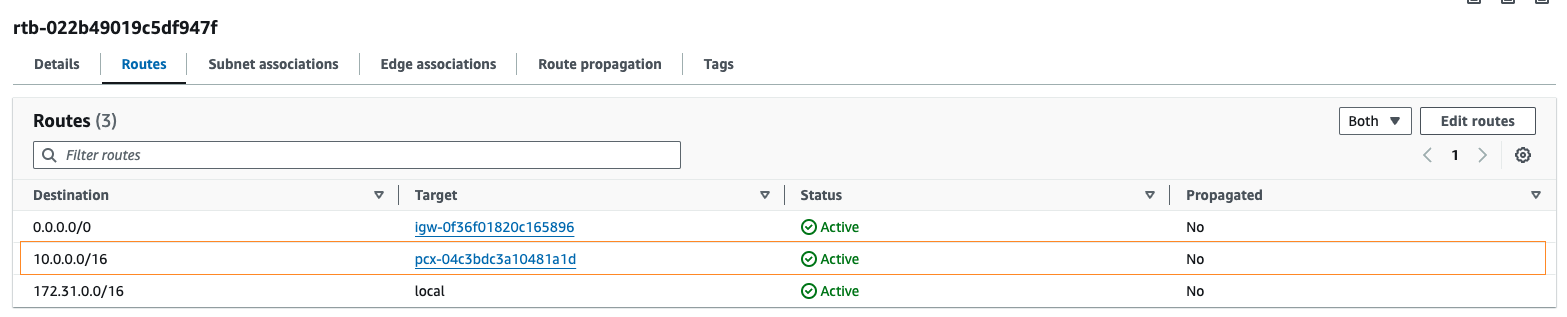

- Modify the Route Table for all the EKS VPCs and add a route to the MSK VPC CIDR.

- Modify the Route Table for the MSK VPC and add a route to the EKS VPC CIDR.

-

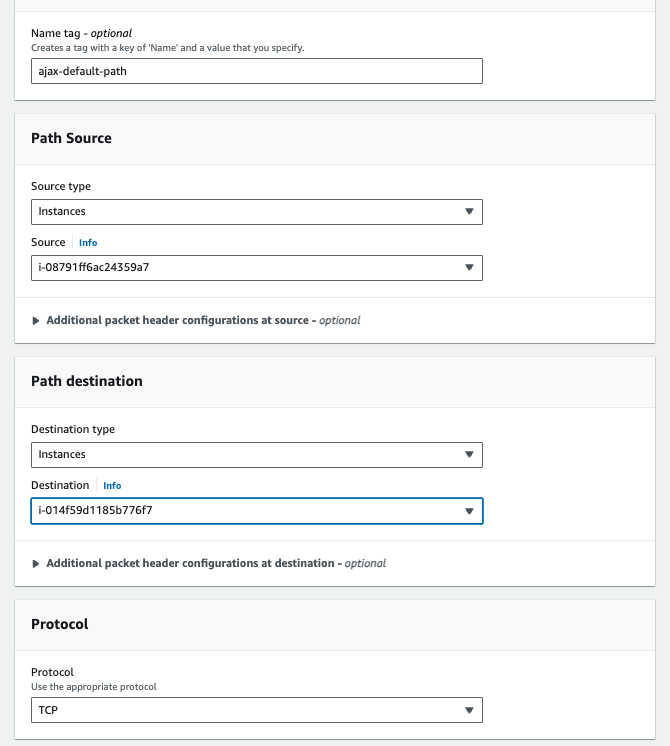

Create one EC2 instance in each VPC. EC2-1 mimics the connection from Onehouse and is in one of the private subnets in the EKS VPC. The security group(s) should be the same as the ones attached to EKS cluster. EC2-2 mimics where the Kafka instance is deployed and is in one of the private subnets (or public subnet) where you deployed Kafka. The security group(s) should be the same as the ones attached to Kafka.

-

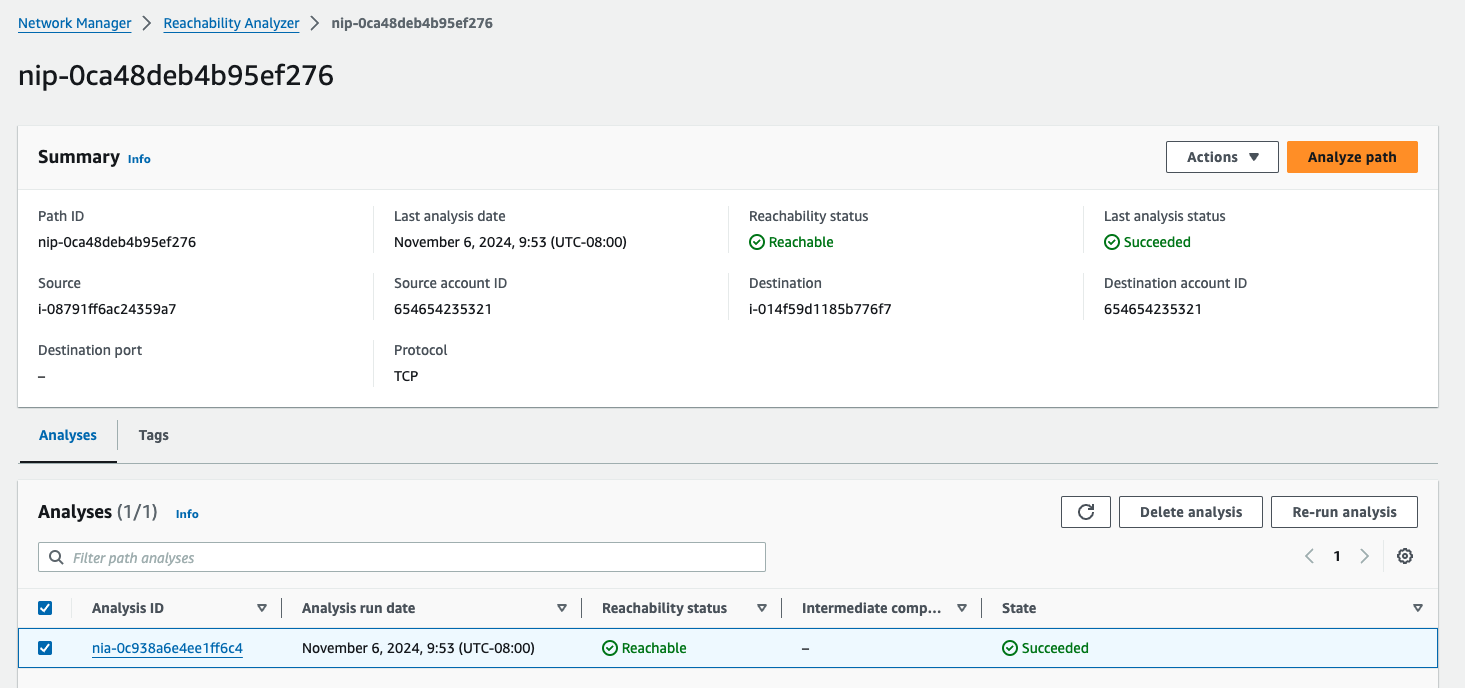

Create a test path in AWS's Reachability Analyzer between those instance

- Analyze the path and confirm that the destination is reachable.
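Two checks complement the steps above and can be sketched with the Python standard library. First, a VPC peering connection cannot be created between VPCs whose CIDR blocks overlap, so it is worth comparing the two ranges before you start. Second, once the routes and security groups are in place, a plain TCP connection attempt from the test instance in the EKS VPC to each broker shows whether the port is reachable. The helper names, CIDR ranges, and broker hostnames below are hypothetical; substitute the values from your own VPCs and your cluster's bootstrap server string.

```python
import ipaddress
import socket


def cidrs_overlap(cidr_a, cidr_b):
    """Return True if the two CIDR blocks share any address."""
    return ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b))


def parse_bootstrap_servers(bootstrap):
    """Split a 'host:port,host:port' string into (host, port) tuples."""
    servers = []
    for entry in bootstrap.split(","):
        host, port = entry.strip().rsplit(":", 1)
        servers.append((host, int(port)))
    return servers


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `cidrs_overlap("10.0.0.0/16", "172.31.0.0/16")` returns `False`, which means those two hypothetical ranges can be peered. From EC2-1, calling `can_connect(host, port)` for each pair returned by `parse_bootstrap_servers(...)` gives a direct check that complements the Reachability Analyzer result.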

Usage Notes

- If a message is compacted or deleted within the Kafka topic, it can no longer be ingested since the payload will be a tombstone/null value.

- Ensure your MSK cluster is in the same region as the Onehouse project. This avoids cross-region data transfer costs.
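The first note above, about compacted and deleted messages, can be illustrated with a simplified model of a compacted topic. This is a sketch under stated assumptions, not a description of how Kafka or Onehouse is implemented: records are modeled as `(key, value)` pairs, compaction keeps only the latest record per key, and a tombstone is a record whose value is `None`. The function names `compact` and `ingestible` are hypothetical.

```python
def compact(records):
    """Keep only the most recent record for each key, in offset order."""
    latest = {}
    for offset, (key, value) in enumerate(records):
        latest[key] = (offset, value)
    survivors = sorted(latest.items(), key=lambda item: item[1][0])
    return [(key, value) for key, (_, value) in survivors]


def ingestible(records):
    """Drop tombstones: a None value carries no payload to ingest."""
    return [(key, value) for key, value in records if value is not None]


# Hypothetical topic contents: "a" is updated once, "b" is deleted.
log = [("a", b"v1"), ("b", b"v1"), ("a", b"v2"), ("b", None)]
```

In this model, `compact(log)` leaves only the second value for `"a"` and the tombstone for `"b"`, so a reader that starts after compaction never sees the earlier payloads. If those payloads are needed, they have to be ingested before the topic is compacted.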