Onboarding Prerequisites

Prerequisites

You'll need a few things before you get started. Make sure each of these is set up correctly before you link your cloud provider in Onehouse.

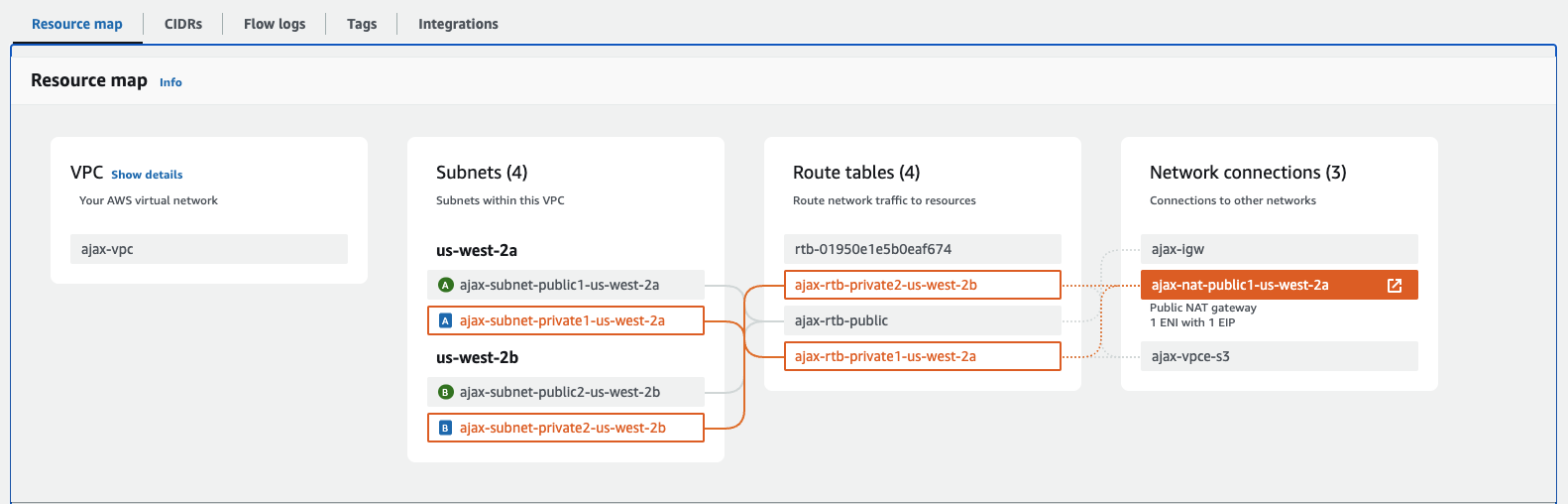

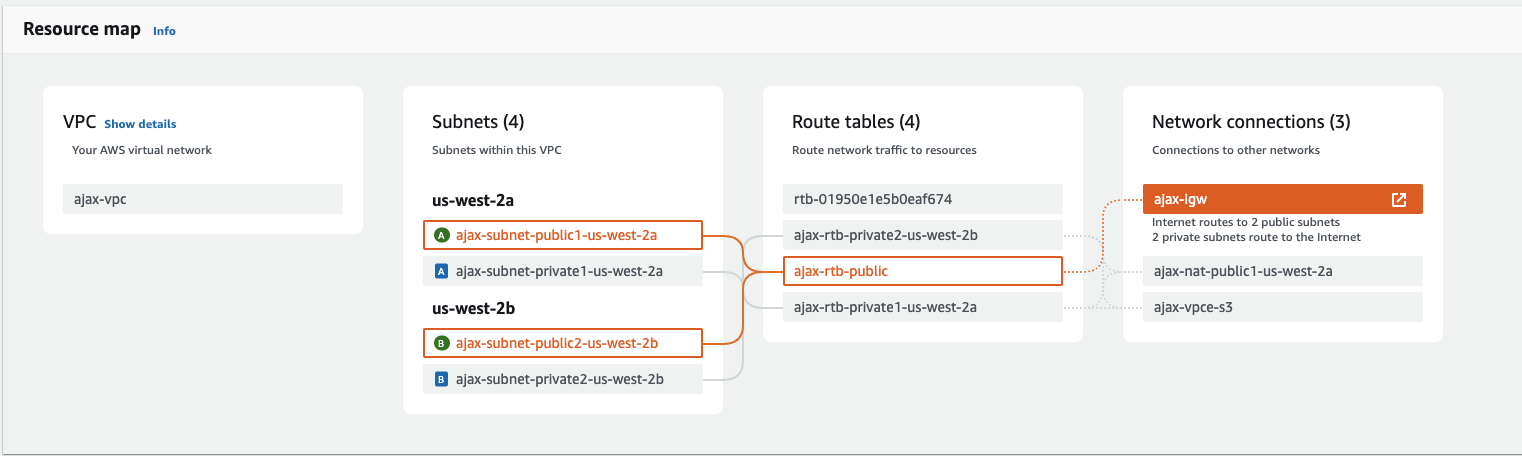

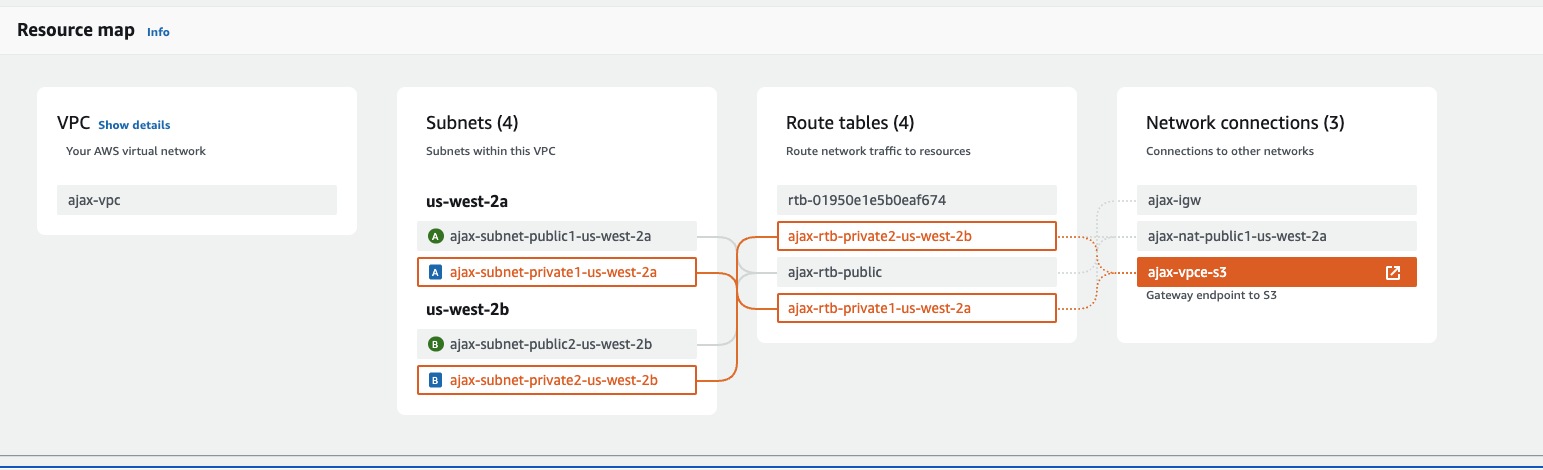

Virtual Private Cloud (VPC)

You'll need an AWS VPC with the following resources:

- AWS VPC: A virtual private cloud with a /16 or /20 CIDR block, for isolating your resources.

- Public/Private Subnets: Two public and two private subnets.

  - Private subnets must have a prefix length between /17 and /20.

  - Public subnets can be smaller than the private subnets, because only the NAT gateway is deployed in them.

  - AWS docs for configuring this can be found here.

- Availability Zones: At least two Availability Zones. The EKS cluster is deployed in the private subnets.

- S3 VPC endpoint: Create one if it doesn't already exist, so that communication between EKS and S3 does not go through the NAT gateway.

- NAT Gateway: A network address translation service that lets instances in private subnets connect to the internet while blocking inbound traffic. NAT gateway docs can be found here.

  - Communication between EKS and the Onehouse control plane goes through the NAT gateway.


A single NAT gateway in one AZ gives you a basic, working network foundation. For better redundancy and fault tolerance, consider adding NAT gateways in other AZs.
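Before creating the VPC, you can sanity-check a planned address layout against the sizing rules above. The following is a minimal Python sketch using only the standard `ipaddress` module; `Subnet` and `check_vpc_layout` are hypothetical helpers (not part of any Onehouse tooling), and the limits it encodes are simply the ones listed in this section.

```python
import ipaddress
from dataclasses import dataclass

# Sizing rules taken from the list above; adjust them if your Onehouse
# onboarding instructions specify different values.
ALLOWED_VPC_PREFIXES = {16, 20}
PRIVATE_SUBNET_PREFIXES = range(17, 21)  # /17 through /20 inclusive


@dataclass
class Subnet:
    cidr: str
    az: str
    public: bool


def check_vpc_layout(vpc_cidr, subnets):
    """Return a list of problems found; an empty list means the layout passes."""
    problems = []
    vpc = ipaddress.ip_network(vpc_cidr)
    if vpc.prefixlen not in ALLOWED_VPC_PREFIXES:
        problems.append(f"VPC {vpc} should be /16 or /20")

    nets = [ipaddress.ip_network(s.cidr) for s in subnets]
    for s, net in zip(subnets, nets):
        if not net.subnet_of(vpc):
            problems.append(f"subnet {net} is outside VPC {vpc}")
        if not s.public and net.prefixlen not in PRIVATE_SUBNET_PREFIXES:
            problems.append(f"private subnet {net} should be /17 to /20")

    # AWS rejects overlapping subnet CIDRs within one VPC, so flag any pair.
    for i, a in enumerate(nets):
        for b in nets[i + 1:]:
            if a.overlaps(b):
                problems.append(f"subnets {a} and {b} overlap")

    public = [s for s in subnets if s.public]
    private = [s for s in subnets if not s.public]
    if len(public) < 2 or len(private) < 2:
        problems.append("need at least two public and two private subnets")
    if len({s.az for s in private}) < 2:
        problems.append("private subnets must span at least two AZs")
    return problems
```

For example, the layout reported by the prerequisite check script at the end of this page (a 10.0.0.0/16 VPC with /20 public subnets and /18 private subnets spread over us-west-2a and us-west-2b) returns an empty problem list.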

Deployment using CloudFormation

You can download this CloudFormation template and use it to deploy a VPC with the resources listed above, creating the environment that Onehouse onboarding requires. Note the following:

- NAT Gateway must be created in a public subnet.
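A subnet counts as public when its route table sends 0.0.0.0/0 to an internet gateway, so you can verify the NAT gateway's placement from route data alone. This sketch assumes route entries shaped like the `Routes` list in the EC2 DescribeRouteTables response (for example, from boto3's `describe_route_tables`); the function names are hypothetical, and fetching the routes is left out so the logic runs offline.

```python
# Route entries use the key names of the EC2 DescribeRouteTables response,
# e.g. ec2.describe_route_tables()["RouteTables"][i]["Routes"] in boto3.

def default_route_target(routes):
    """Return the target ID of the 0.0.0.0/0 route, or None if there is none."""
    for route in routes:
        if route.get("DestinationCidrBlock") == "0.0.0.0/0":
            return route.get("GatewayId") or route.get("NatGatewayId")
    return None


def is_public_subnet(routes):
    """A subnet is public when its default route points at an internet gateway."""
    target = default_route_target(routes)
    return target is not None and target.startswith("igw-")


def has_s3_gateway_endpoint_route(routes):
    """Gateway endpoint routes appear with a vpce- ID in the GatewayId field."""
    return any((route.get("GatewayId") or "").startswith("vpce-") for route in routes)


def nat_placement_ok(nat_subnet_routes):
    """The NAT gateway must sit in a subnet whose route table is public."""
    return is_public_subnet(nat_subnet_routes)
```

The same helpers also tell you whether a private subnet's route table already has the S3 gateway endpoint route, which is what keeps S3 traffic off the NAT gateway.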

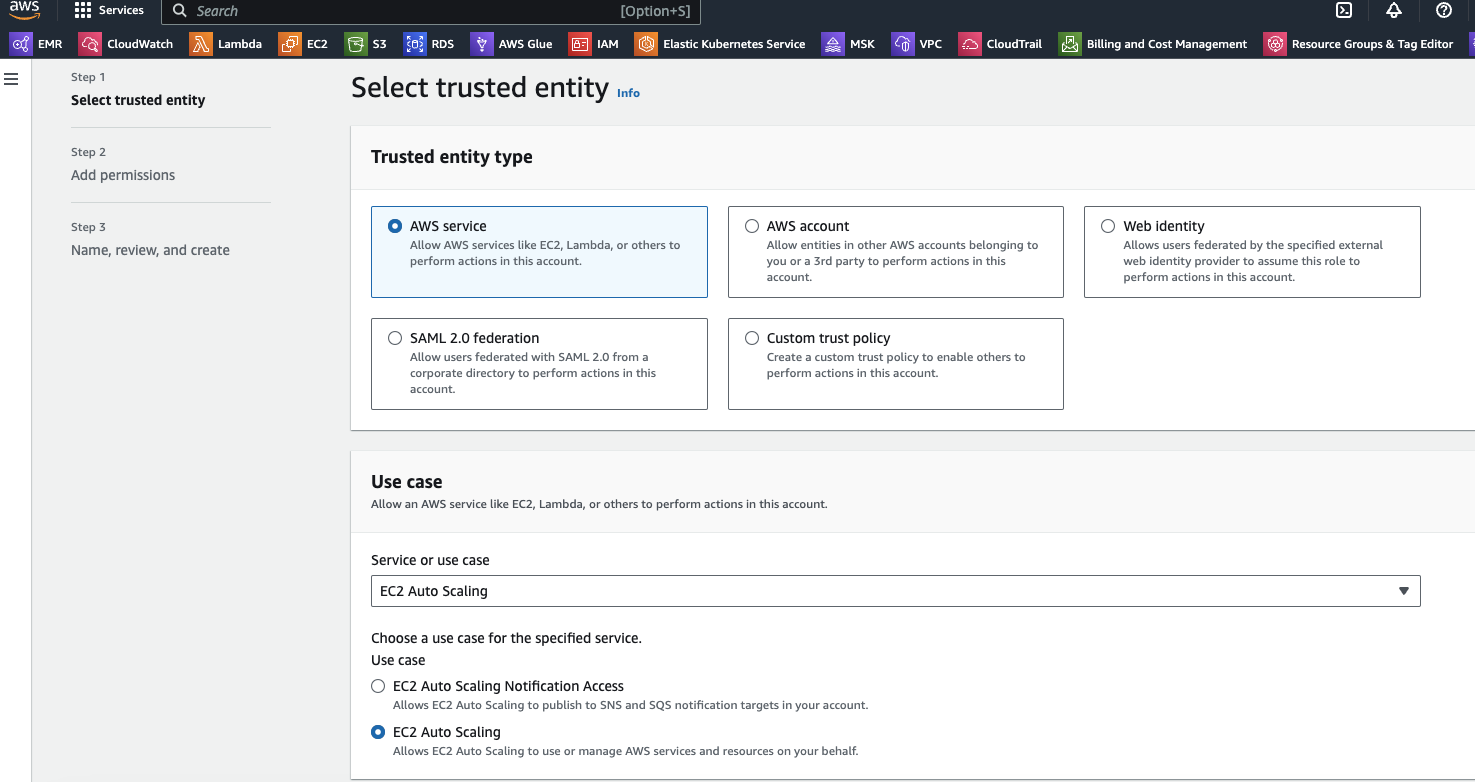

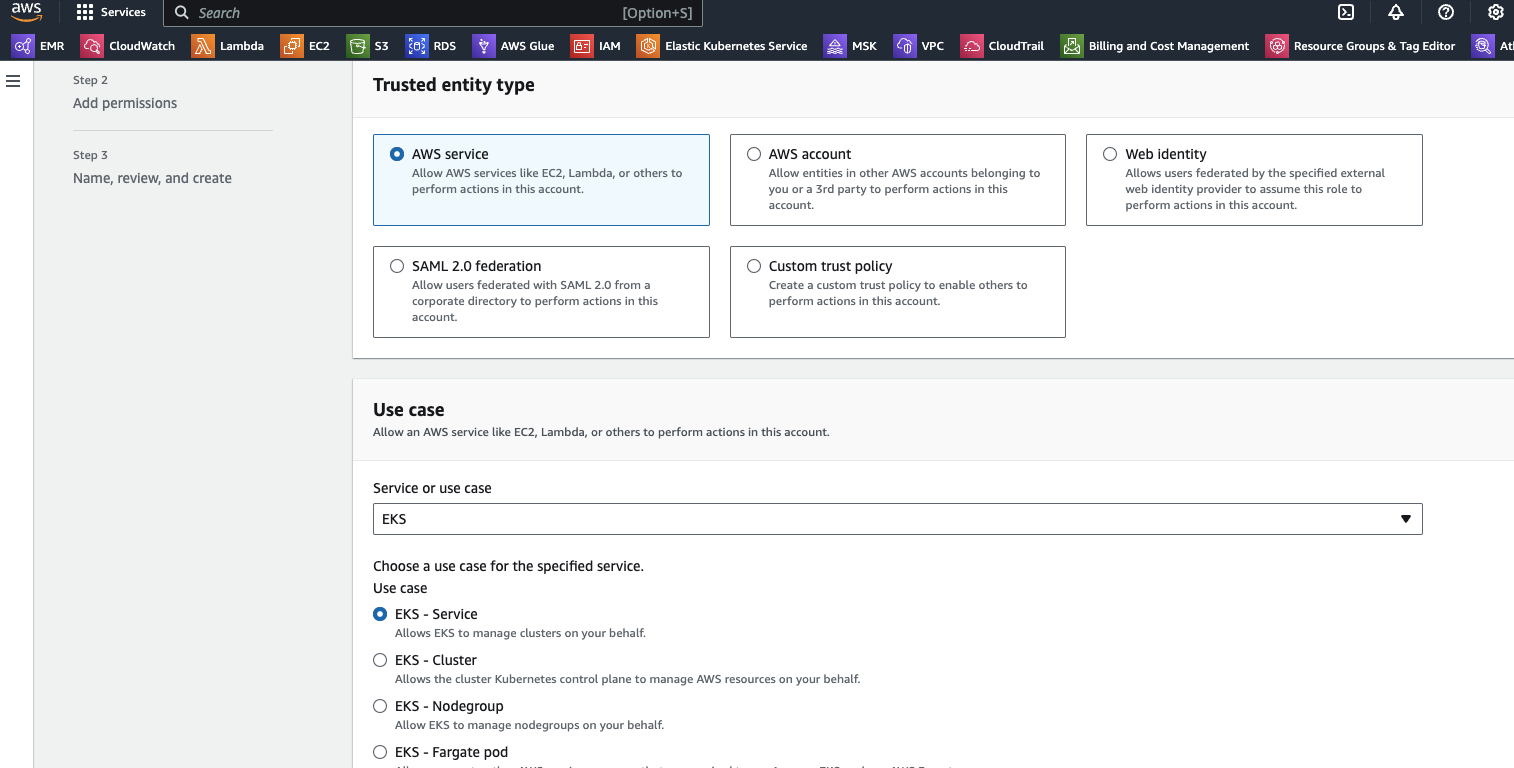

IAM Service Roles for Amazon EKS

Make sure that AWSServiceRoleForAmazonEKSNodegroup, AWSServiceRoleForAmazonEKS, and AWSServiceRoleForAutoScaling all exist in your account. If any of them is missing, follow the steps below to create it.

- For the EKS role: go to IAM roles, choose "Create role", select "EKS", select "EKS - Service", and create the role.

- For the EKS Node Group role: go to IAM roles, choose "Create role", select "EKS", select "EKS - Nodegroup", and create the role.

- For the EC2 Auto Scaling role: go to IAM roles, choose "Create role", select "EC2 Auto Scaling", select "EC2 Auto Scaling" again, and create the role.
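If you prefer the CLI to the console, the three roles are service-linked roles that `aws iam create-service-linked-role` can create. The sketch below only computes which commands to run for the roles that are missing; the role-to-service mapping reflects our understanding of AWS's service principals, so verify it against the IAM documentation, and `missing_role_commands` is a hypothetical helper.

```python
# Service principal for each service-linked role named above. Verify the
# mapping against the AWS IAM documentation before relying on it.
SERVICE_LINKED_ROLES = {
    "AWSServiceRoleForAmazonEKS": "eks.amazonaws.com",
    "AWSServiceRoleForAmazonEKSNodegroup": "eks-nodegroup.amazonaws.com",
    "AWSServiceRoleForAutoScaling": "autoscaling.amazonaws.com",
}


def missing_role_commands(existing_role_names):
    """Return AWS CLI commands that create each required role not yet present."""
    existing = set(existing_role_names)
    return [
        f"aws iam create-service-linked-role --aws-service-name {service}"
        for role, service in SERVICE_LINKED_ROLES.items()
        if role not in existing
    ]
```

You can feed it the whitespace-separated output of `aws iam list-roles --query "Roles[].RoleName" --output text`. Checking first matters because creating a service-linked role that already exists fails.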

Gateway Endpoints for Amazon S3 - Access to Image Registries

Add the following buckets, which are used by image registries such as Docker Hub, the Kubernetes registry, and Quay.io, to your S3 gateway endpoint policy. Onehouse uses these registries to manage and pull the images of the microservices it deploys in your account. These registries have started using S3 (AWS CloudFront) to distribute images to AWS environments, in addition to the Cloudflare CDN.

    {
      "Version": "2012-10-17",
      "Id": "...",
      "Statement": [
        {
          "Sid": "stmtAllowDockerImages",
          "Effect": "Allow",
          "Principal": "*",
          "Action": "*",
          "Resource": [
            "arn:aws:s3:::docker-images-prod/*",
            "arn:aws:s3:::prod-<AWS_REGION>-starport-layer-bucket/*",
            "arn:aws:s3:::quayio-production-s3/*",
            "arn:aws:s3:::prod-registry-k8s-io*"
          ]
        }
      ]
    }
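Rather than editing the endpoint policy by hand, you can merge the registry statement into your current policy programmatically. This is a minimal sketch under stated assumptions: `registry_statement` and `merge_statement` are hypothetical helpers, `{region}` stands in for the `<AWS_REGION>` placeholder, and an existing statement with the same `Sid` is replaced so that re-running the merge is harmless.

```python
import json

# Registry bucket ARNs from the example policy above; {region} replaces
# the <AWS_REGION> placeholder.
REGISTRY_RESOURCES = [
    "arn:aws:s3:::docker-images-prod/*",
    "arn:aws:s3:::prod-{region}-starport-layer-bucket/*",
    "arn:aws:s3:::quayio-production-s3/*",
    "arn:aws:s3:::prod-registry-k8s-io*",
]


def registry_statement(region):
    """Build the registry-access statement for one AWS region."""
    return {
        "Sid": "stmtAllowDockerImages",
        "Effect": "Allow",
        "Principal": "*",
        "Action": "*",
        "Resource": [r.format(region=region) for r in REGISTRY_RESOURCES],
    }


def merge_statement(policy_json, statement):
    """Add a statement to a policy, replacing any statement with the same Sid."""
    policy = json.loads(policy_json)
    existing = policy.get("Statement", [])
    if isinstance(existing, dict):  # IAM JSON also allows a single statement object
        existing = [existing]
    kept = [s for s in existing if s.get("Sid") != statement["Sid"]]
    policy["Statement"] = kept + [statement]
    policy.setdefault("Version", "2012-10-17")
    return json.dumps(policy, indent=2)
```

The current policy can be read with `aws ec2 describe-vpc-endpoints --vpc-endpoint-ids <vpce-id> --query "VpcEndpoints[0].PolicyDocument" --output text`, and the merged result written back with `aws ec2 modify-vpc-endpoint --vpc-endpoint-id <vpce-id> --policy-document file://policy.json`.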

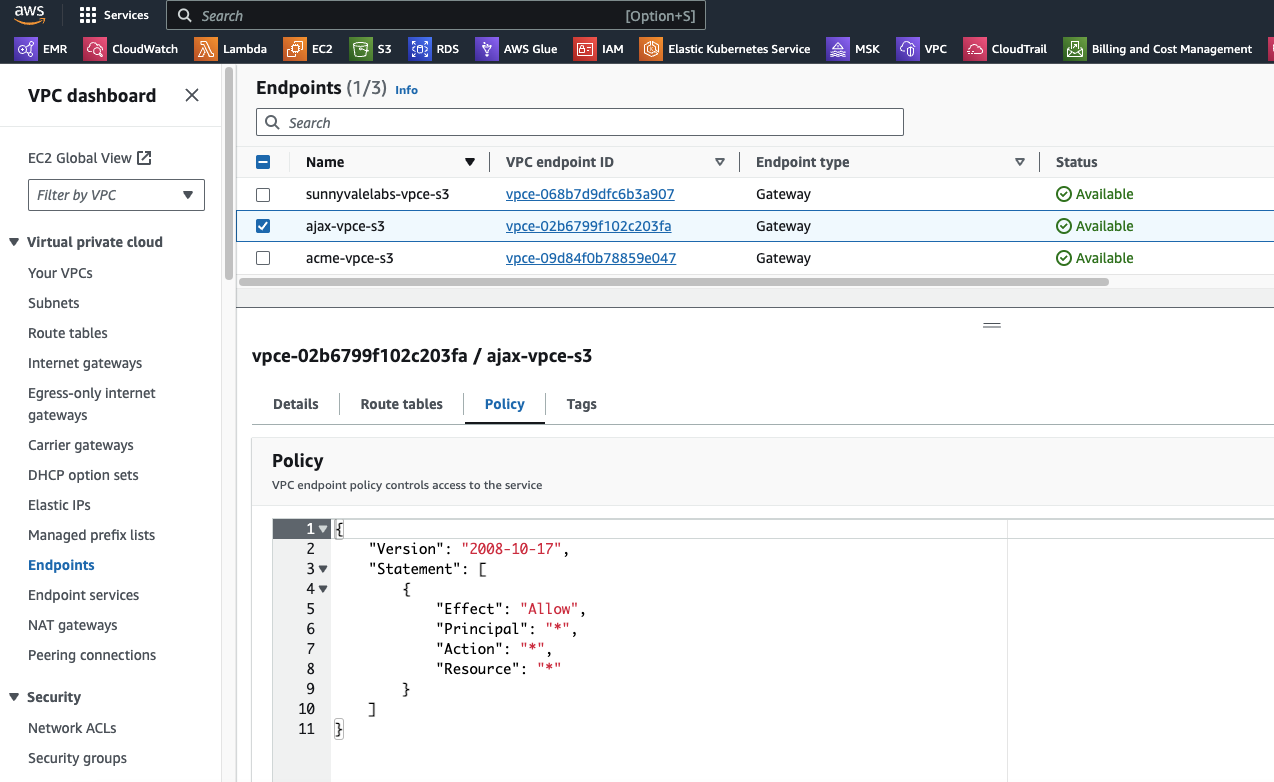

Gateway Endpoints for Amazon S3 - Access to Onehouse Buckets

Because Onehouse provides a managed ingestion service, it reads and writes data in your S3 buckets. Make sure your VPC has a gateway endpoint for S3 so that this traffic does not flow through the NAT gateway. AWS docs for setting this up can be found here.
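A gateway endpoint is created with the EC2 CreateVpcEndpoint API. The sketch below builds the arguments for boto3's `ec2.create_vpc_endpoint`; `s3_gateway_endpoint_params` is a hypothetical helper, and we assume you pass at least the route tables of the private subnets where EKS runs, since the endpoint only affects traffic from subnets whose route tables it is attached to.

```python
def s3_gateway_endpoint_params(vpc_id, region, route_table_ids):
    """Keyword arguments for EC2 CreateVpcEndpoint (boto3: ec2.create_vpc_endpoint)."""
    if not route_table_ids:
        raise ValueError("attach the endpoint to at least one route table")
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        # S3 gateway endpoints use the regional service name.
        "ServiceName": f"com.amazonaws.{region}.s3",
        # The endpoint adds an S3 prefix-list route to each of these tables,
        # so S3 traffic from the associated subnets bypasses the NAT gateway.
        "RouteTableIds": list(route_table_ids),
    }
```

Usage, with a boto3 client for the VPC's region: `ec2.create_vpc_endpoint(**s3_gateway_endpoint_params("vpc-...", "us-west-2", ["rtb-...", "rtb-..."]))`.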

If you have replaced the default VPC endpoint policy, which allows access to all S3 buckets, with a more restrictive one, edit that policy to allow the following buckets, which Onehouse uses to store configs, logs, and similar data in your AWS account. Also add every bucket that your lakes use to store Onehouse tables.

    {
      "Version": "...",
      "Id": "...",
      "Statement": [
        {
          "Sid": "Allow-access-to-onehouse-bucket-and-lakes",
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:*",
          "Resource": [
            "arn:aws:s3:::onehouse-customer-bucket-XXXX",
            "arn:aws:s3:::onehouse-customer-bucket-XXXX/*",
            "arn:aws:s3:::<lake-bucket>",
            "arn:aws:s3:::<lake-bucket>/*",
            "arn:aws:s3:::s3-datasource-metadata-<ONEHOUSE_REQUEST_ID>",
            "arn:aws:s3:::s3-datasource-metadata-<ONEHOUSE_REQUEST_ID>/*"
          ]
        }
      ]
    }
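When you have several lake buckets, generating the resource list avoids forgetting either the bucket ARN (needed for bucket-level actions such as listing) or the `/*` object ARN. In this hypothetical helper, `bucket_suffix` stands for the `XXXX` in the Onehouse bucket name and `request_id` for `<ONEHOUSE_REQUEST_ID>`; both values come from your onboarding, and the lake bucket names in any call are your own.

```python
def onehouse_endpoint_statement(bucket_suffix, request_id, lake_buckets):
    """Build the S3 endpoint policy statement for the Onehouse and lake buckets."""
    buckets = [
        f"onehouse-customer-bucket-{bucket_suffix}",
        *lake_buckets,
        f"s3-datasource-metadata-{request_id}",
    ]
    resources = []
    for bucket in buckets:
        # Bucket-level actions need the bucket ARN; object actions need bucket/*.
        resources += [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"]
    return {
        "Sid": "Allow-access-to-onehouse-bucket-and-lakes",
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": resources,
    }
```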

Note: The policies above are only examples. Modify or extend your existing policy according to your company's policies and requirements.

AWS S3 bucket to store your data lakehouse

A storage bucket is required for your data lakehouse. Data is encrypted by default using Amazon S3 managed keys (SSE-S3).

Create an S3 Bucket:

- Create a new Amazon S3 bucket that will store your data.
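If you create the bucket with boto3 rather than the console, one common pitfall is the region setting. The sketch assumes you create the bucket in the same region as your VPC (S3 gateway endpoints only carry traffic to buckets in their own region); `create_bucket_kwargs` is a hypothetical helper for `s3.create_bucket`.

```python
def create_bucket_kwargs(bucket_name, region):
    """Keyword arguments for S3 CreateBucket (boto3: s3.create_bucket)."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is S3's default location and must not be passed as a
    # LocationConstraint; every other region must be passed explicitly.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs
```

Usage: `boto3.client("s3", region_name=region).create_bucket(**create_bucket_kwargs("<your-lake-bucket>", region))`.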

[Optional] AWS S3 bucket to store your data lakehouse with Customer Managed Encryption (SSE-KMS)

These optional steps describe how to encrypt the data in your data lakehouse with your own keys. Before proceeding, create a standard AWS S3 bucket and complete the onboarding process; then continue with the steps below. Encryption can take anywhere from minutes to hours, depending on the size of your data lakehouse.

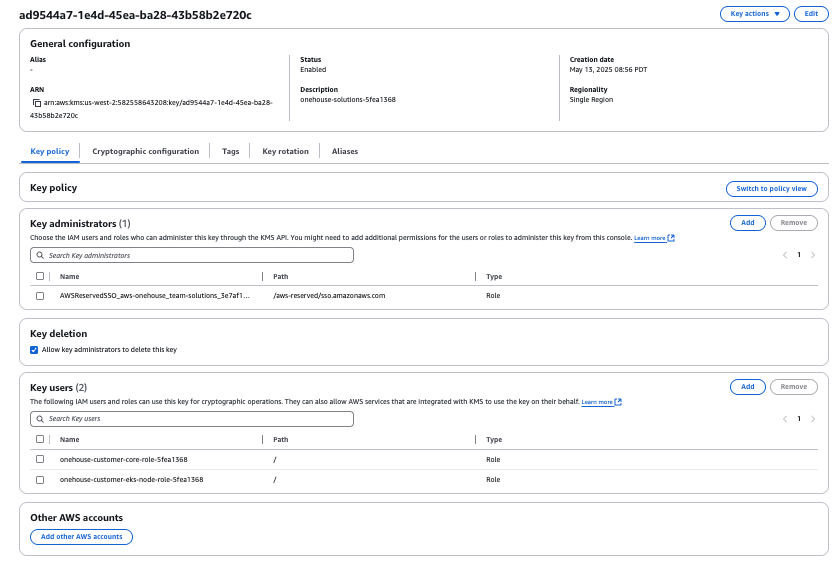

Create an AWS KMS Key:

- Use the AWS Key Management Service (KMS) to create a new key.

- Ensure the IAM roles onehouse-customer-eks-node-role-XXXX and onehouse-customer-core-role-XXXX are added as KMS key users. If these roles do not exist, create a standard AWS S3 bucket, complete the onboarding process, and then repeat these steps.
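Adding the two roles as key users in the KMS console adds a statement like the one below to the key policy. This sketch is for teams that manage key policies as code; the action list mirrors the console's default key-user statement for symmetric keys, `key_user_statement` is a hypothetical helper, and `role_suffix` stands for the `XXXX` in the role names.

```python
# Actions the AWS KMS console grants when you add a principal as a "key user"
# of a symmetric encryption key.
KEY_USER_ACTIONS = [
    "kms:Encrypt",
    "kms:Decrypt",
    "kms:ReEncrypt*",
    "kms:GenerateDataKey*",
    "kms:DescribeKey",
]


def key_user_statement(account_id, role_suffix):
    """Key policy statement letting the two Onehouse roles use the key."""
    roles = [
        f"onehouse-customer-eks-node-role-{role_suffix}",
        f"onehouse-customer-core-role-{role_suffix}",
    ]
    return {
        "Sid": "Allow use of the key",
        "Effect": "Allow",
        "Principal": {"AWS": [f"arn:aws:iam::{account_id}:role/{r}" for r in roles]},
        "Action": KEY_USER_ACTIONS,
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }
```

`aws kms put-key-policy` replaces the entire key policy, so add this statement to the existing policy (keeping its administrator statements) rather than uploading it on its own.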

Configure Customer Managed Encryption KMS Key in Onehouse onboarding:

- Terraform: Update the s3KmsKeys parameter with the ARN (Amazon Resource Name) of your KMS key and run Terraform again.

- AWS CloudFormation: In the "KMS keys configuration," specify the ARN of your KMS key and run the wizard again.

- Using customer managed encryption does not require changing your current Onehouse Secret Manager setting in Onehouse onboarding.

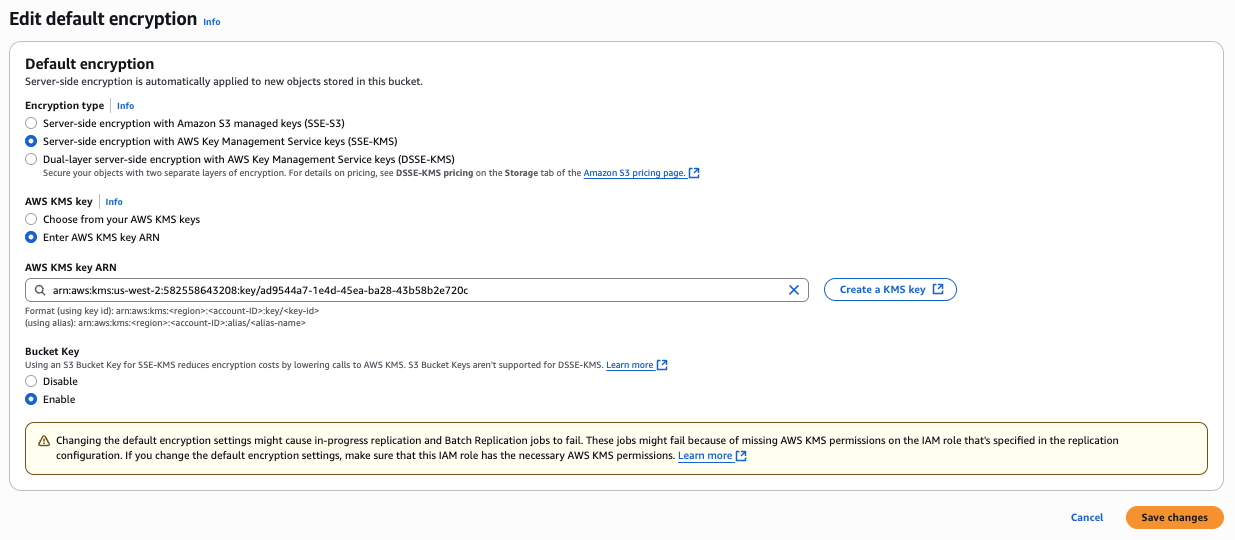

Modify the S3 Bucket to add Customer Managed Encryption:

- Navigate to the "Default encryption" settings of your previously created bucket.

- Select "Server-side encryption with AWS Key Management Service keys (SSE-KMS)".

- Choose the KMS key you created earlier, enable "Bucket Key", and save the changes. All new files uploaded to the bucket are then encrypted with this key automatically.

- To encrypt existing files, select all files in the bucket.

- Go to "Actions" and click "Edit server-side encryption".

- Select "SSE-KMS", enter the ARN of your encryption key, enable "Bucket Key", and click "Save changes". This applies SSE-KMS encryption to all of your existing files.

Enabling the bucket key for SSE-KMS can reduce AWS KMS request costs by up to 99 percent by decreasing the request traffic from Amazon S3 to AWS KMS.
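The console steps above can also be scripted. In this sketch, `default_encryption_config` builds the `ServerSideEncryptionConfiguration` for boto3's `put_bucket_encryption`, and existing objects are re-encrypted by copying each one onto itself with SSE-KMS settings, which is our assumption about an equivalent to the console's "Edit server-side encryption" action. All helper names are hypothetical, and `encrypt_bucket` expects a boto3 S3 client you create yourself.

```python
def default_encryption_config(kms_key_arn):
    """ServerSideEncryptionConfiguration for SSE-KMS with an S3 Bucket Key."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                "BucketKeyEnabled": True,
            }
        ]
    }


def reencrypt_copy_kwargs(bucket, key, kms_key_arn):
    """CopyObject arguments that rewrite an object in place with SSE-KMS."""
    return {
        "Bucket": bucket,
        "Key": key,
        "CopySource": {"Bucket": bucket, "Key": key},
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_arn,
        "BucketKeyEnabled": True,
    }


def encrypt_bucket(s3, bucket, kms_key_arn):
    """Set default encryption, then re-encrypt the objects already in the bucket.

    s3 is a boto3 S3 client. copy_object only handles objects up to 5 GB;
    larger objects need a multipart copy (for example, the managed s3.copy).
    """
    s3.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=default_encryption_config(kms_key_arn),
    )
    for page in s3.get_paginator("list_objects_v2").paginate(Bucket=bucket):
        for obj in page.get("Contents", []):
            s3.copy_object(**reencrypt_copy_kwargs(bucket, obj["Key"], kms_key_arn))
```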

VPC peering

The Onehouse stack needs network access to read from your data sources (for example, Kafka clusters or databases). Make sure that VPC peering is set up correctly. Additional AWS docs on how to do this can be found here.
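AWS cannot peer two VPCs whose IPv4 CIDR blocks overlap, so it is worth checking address ranges before requesting a peering connection. A minimal sketch with the standard `ipaddress` module; `peering_conflicts` is a hypothetical helper, and each argument is the list of CIDR blocks of one VPC.

```python
import ipaddress


def peering_conflicts(vpc_cidrs, peer_cidrs):
    """Return (vpc_cidr, peer_cidr) pairs that overlap and would block peering."""
    return [
        (a, b)
        for a in vpc_cidrs
        for b in peer_cidrs
        if ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))
    ]
```

An empty result only means peering is possible. Traffic also needs routes to the peering connection (`pcx-...`) in the route tables on both sides and security group rules that allow the data source's ports.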

List of allowed domains

If your company operates in a highly regulated industry and your AWS environment is protected by an egress firewall, the following domains must be allowlisted for the Onehouse data plane to function:

Domains
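For a quick egress spot-check from inside the VPC, independent of the full check script, a TCP connection on port 443 to each domain is often enough. This sketch assumes `uris.txt` lists one domain per line (its exact format is not documented here), and `load_domains`, `can_connect`, and `check_file` are hypothetical helpers. Bare apex domains such as `cloudfront.net` may refuse connections even when their subdomains are allowed, so a failure on an apex entry is a prompt to test a concrete hostname, not proof of a block.

```python
import socket


def load_domains(lines):
    """Parse one domain per line, skipping blanks, # comments, and duplicates."""
    seen, domains = set(), []
    for line in lines:
        domain = line.strip()
        if not domain or domain.startswith("#") or domain in seen:
            continue
        seen.add(domain)
        domains.append(domain)
    return domains


def can_connect(host, port=443, timeout=5.0):
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failures, refused connections, and timeouts
        return False


def check_file(path):
    """Print reachability for every domain listed in the file at path."""
    with open(path) as f:
        for domain in load_domains(f):
            status = "reachable" if can_connect(domain) else "blocked or unreachable"
            print(f"{domain}: {status}")
```

Usage: `check_file("uris.txt")`.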

Screenshots of AWS networking after the configuration is complete

Prerequisite Check Script

After all prerequisites are in place, you can run the aws_permissions_check.sh script, together with its uris.txt file, on an Amazon Linux VM within your VPC (for example, amazon/al2023-ami-2023.7.20250414.0-kernel-6.1-x86_64, or any AMI with the AWS CLI and Docker CLI installed). The script's output should closely resemble the example below, though minor variations are expected. For instance, Onehouse's functionality does not strictly depend on the presence of a NAT gateway, so NAT-related results may differ from the example.

    sh-5.2$ /tmp/aws.sh [VPC-ID] [REGION] [ONEHOUSE_PROJECT_REQUEST_ID]
    Starting VPC and infrastructure checks for VPC: [VPC-ID] 10.0.0.0/16 in the region: us-west-2
    INFO: Checking DNS Resolution and DNS Hostname Attributes in the VPC: [VPC-ID] ...
    ✔ DNS Resolution is enabled for VPC [VPC-ID]
    ✔ DNS Hostnames is enabled for VPC [VPC-ID]
    ✔: S3 VPC Endpoint found - [ENDPOINT-ID]
    INFO: Checking subnet: [SUBNET-ID-1]...
    Availability zone: us-west-2b
    CIDR Block: 10.0.16.0/20
    ✔ Available IP Address Count 4089
    ✔ Route table [ROUTE-TABLE-ID-1] has target VPC endpoint [ENDPOINT-ID].
    ✔ Route table [ROUTE-TABLE-ID-1] has no target NAT gateways
    Is a public subnet.
    ⚠ No NAT associated with the subnet
    INFO: Checking subnet: [SUBNET-ID-2]...
    Availability zone: us-west-2a
    CIDR Block: 10.0.64.0/18
    ✔ Available IP Address Count 15692
    ✔ Route table [ROUTE-TABLE-ID-2] has target VPC endpoint [ENDPOINT-ID].
    ✔ Route table [ROUTE-TABLE-ID-2] has target NAT gateways - [NAT-ID]
    Is a private subnet
    ✔ No NAT associated with the subnet
    INFO: Checking subnet: [SUBNET-ID-3]...
    Availability zone: us-west-2a
    CIDR Block: 10.0.0.0/20
    ✔ Available IP Address Count 4070
    ✔ Route table [ROUTE-TABLE-ID-1] has target VPC endpoint [ENDPOINT-ID].
    ✔ Route table [ROUTE-TABLE-ID-1] has no target NAT gateways
    Is a public subnet.
    ✔ NAT associated with the subnet - [NAT-ID]
    INFO: Checking subnet: [SUBNET-ID-4]...
    Availability zone: us-west-2b
    CIDR Block: 10.0.128.0/18
    ✔ Available IP Address Count 16343
    ✔ Route table [ROUTE-TABLE-ID-2] has target VPC endpoint [ENDPOINT-ID].
    ✔ Route table [ROUTE-TABLE-ID-2] has target NAT gateways - [NAT-ID]
    Is a private subnet
    ✔ No NAT associated with the subnet
    ✔: Private subnets exist across at least two AZs - us-west-2a us-west-2b
    ✔: NAT gatway exists in atleast one public subnet - [NAT-ID]
    INFO: Checking required onehouse roles
    ✔ IAM role - onehouse-customer-core-role-5fea1368 exists.
    ✔ IAM role - onehouse-customer-csi-driver-role-5fea1368 exists.
    ✔ IAM role - onehouse-customer-eks-node-role-5fea1368 exists.
    ✔ IAM role - onehouse-customer-eks-role-5fea1368 exists.
    ✔ IAM role - onehouse-customer-support-role-5fea1368 exists.
    ✔ IAM role - AWSServiceRoleForAutoScaling exists.
    ✔ IAM role - AWSServiceRoleForAmazonEKS exists.
    ✔ IAM role - AWSServiceRoleForAmazonEKSNodegroup exists.
    INFO: Checking image registries accessibility
    Pulling the image - Docker Hub
    ✔ Registry - Docker Hub is accessible
    Pulling the image - Kubernetes
    ✔ Registry - Kubernetes is accessible
    Pulling the image - Quay
    ✔ Registry - Quay is accessible
    ✔: All checked image registries are accessible
    ✔: VPC and infrastructure check completed successfully

    Starting whitelist connectivity test for URIs in 'uris.txt'...
    ---------------------------------------------------------
    Testing URI: amazonaws.com
    Testing connectivity to: amazonaws.com ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: googleapis.com
    Testing connectivity to: googleapis.com ... Connected (HTTP 404).
    -> Likely whitelisted (connection successful).
    Testing URI: docker.io
    Testing connectivity to: docker.io ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: cloudfront.net
    Testing connectivity to: cloudfront.net ... Failed to connect.
    -> Potential whitelist issue or connection blocked.
    Testing URI: onehouse.ai
    Testing connectivity to: onehouse.ai ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: onehouse.dev
    Testing connectivity to: onehouse.dev ... Failed to connect.
    -> Potential whitelist issue or connection blocked.
    Testing URI: ecr.aws
    Testing connectivity to: ecr.aws ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: gcr.io
    Testing connectivity to: gcr.io ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: quay.io
    Testing connectivity to: quay.io ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: k8s.io
    Testing connectivity to: k8s.io ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: kubernetes.io
    Testing connectivity to: kubernetes.io ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: pkg.dev
    Testing connectivity to: pkg.dev ... Connected (HTTP 302).
    -> Likely whitelisted (connection successful).
    Testing URI: monitoring.coreos.com
    Testing connectivity to: monitoring.coreos.com ... Failed to connect.
    -> Potential whitelist issue or connection blocked.
    Testing URI: googleusercontent.com
    Testing connectivity to: googleusercontent.com ... Connected (HTTP 404).
    -> Likely whitelisted (connection successful).
    Testing URI: google.com
    Testing connectivity to: google.com ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: terraform.io
    Testing connectivity to: terraform.io ... Connected (HTTP 308).
    -> Likely whitelisted (connection successful).
    Testing URI: hashicorp.com
    Testing connectivity to: hashicorp.com ... Connected (HTTP 308).
    -> Likely whitelisted (connection successful).
    Testing URI: confluent.cloud
    Testing connectivity to: confluent.cloud ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: kubeflow.github.io
    Testing connectivity to: kubeflow.github.io ... Connected (HTTP 404).
    -> Likely whitelisted (connection successful).
    Testing URI: github.com
    Testing connectivity to: github.com ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: githubusercontent.com
    Testing connectivity to: githubusercontent.com ... Failed to connect.
    -> Potential whitelist issue or connection blocked.
    Testing URI: pagerduty.com
    Testing connectivity to: pagerduty.com ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: cloudflare.docker.com
    Testing connectivity to: cloudflare.docker.com ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: telemetry.onehouse.ai
    Testing connectivity to: telemetry.onehouse.ai ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: gcr.io
    Testing connectivity to: gcr.io ... Connected (HTTP 301).
    -> Likely whitelisted (connection successful).
    Testing URI: googlecloudplatform.github.io
    Testing connectivity to: googlecloudplatform.github.io ... Connected (HTTP 200), but may not be fully accessible.
    -> Potential whitelist issue or connection blocked.
    Whitelist connectivity test completed.