Confluent Kafka

Continuously stream data directly from Confluent Kafka into Onehouse tables.

Click Sources > Add New Source > Confluent Kafka. Then, follow the setup guide within the Onehouse console to configure your source.

Cloud Provider Support

- AWS: ✅ Supported

- GCP: ✅ Supported

API Keys

To connect Onehouse to Confluent Cloud, create API keys for both Kafka and Schema Registry. You can generate each key under your own user account or under a dedicated service account.

Using a Service Account

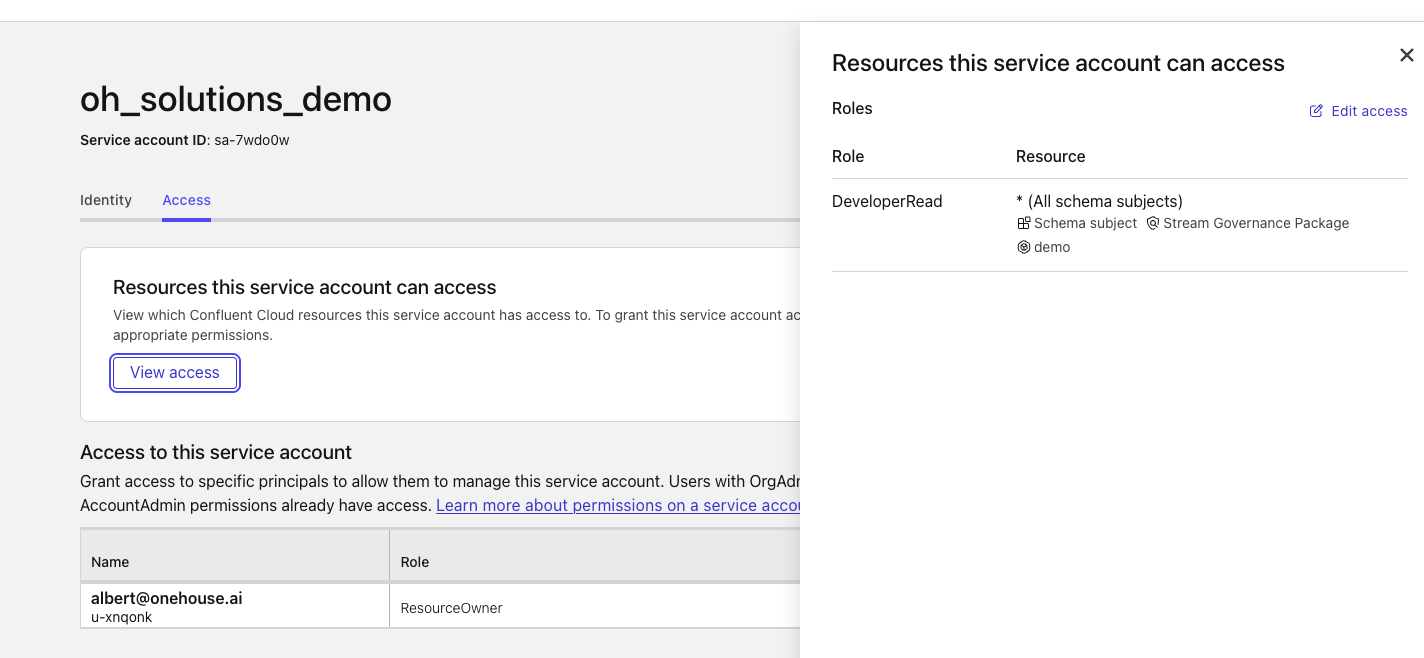

If you select a service account in Confluent for the Schema Registry API key, that account starts with no permissions. To grant access:

- In Confluent, navigate to Administration > Accounts & Access > Accounts > Service account

- Select the specific service account. Then navigate to Access > View access

- Assign the DeveloperRead role to the relevant schema.

- Similarly, if you choose a service account for Kafka, you'll need to explicitly grant the permissions PREFIXED READ, PREFIXED DESCRIBE, and PREFIXED DESCRIBE_CONFIGS to the necessary topic.
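As a rough illustration of what these grants cover, the sketch below models a prefixed ACL check in plain Python. It assumes the usual Kafka semantics, where a PREFIXED resource pattern applies to every topic whose name starts with the given prefix. The `Grant` type, the `missing_operations` helper, and the `orders.` prefix are hypothetical names chosen for illustration; they are not part of any Confluent or Onehouse API.

```python
from dataclasses import dataclass

# Operations the Kafka service account needs on the topic, per the list above.
REQUIRED_OPERATIONS = frozenset({"READ", "DESCRIBE", "DESCRIBE_CONFIGS"})


@dataclass(frozen=True)
class Grant:
    """Hypothetical model of one ALLOW ACL with pattern type PREFIXED."""
    operation: str
    topic_prefix: str


def missing_operations(grants, topic):
    """Return the required operations that no prefixed grant covers for `topic`."""
    covered = {
        g.operation
        for g in grants
        if topic.startswith(g.topic_prefix)
    }
    return REQUIRED_OPERATIONS - covered


# Example: DESCRIBE_CONFIGS was forgotten, so the check reports it as missing.
grants = [
    Grant("READ", "orders."),
    Grant("DESCRIBE", "orders."),
]
print(sorted(missing_operations(grants, "orders.created")))
```

A check like this can help confirm that all three operations are granted on a prefix that actually matches the topics you plan to ingest.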

Reading Kafka Messages

Onehouse supports the following serialization types for Kafka message values:

| Serialization Type (for message value) | Schema Registry | Description |
|---|---|---|
| Avro | Required | Deserializes the message value in the Avro format. Send messages using Kafka-Avro-specific libraries; plain Avro libraries will not work. |
| JSON | Optional | Deserializes the message value in the JSON format. |
| JSON_SR (JSON Schema) | Required | Deserializes the message value in the Confluent JSON Schema format. |
| Protobuf | Required | Deserializes the message value in the Protocol Buffer format. |
| Byte Array | N/A | Passes the raw message value through as a byte array without deserializing it. Also adds the message key as a string field. |

Onehouse does not currently support reading Kafka message keys for Avro, JSON, JSON_SR, or Protobuf serialized messages.
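One likely reason plain Avro libraries do not work for the Avro type is framing. Confluent's Schema Registry serializers prepend a 5-byte header to each message value: a magic byte of 0 followed by a 4-byte big-endian schema ID. A plain Avro encoder writes no such header. The sketch below shows how that header can be inspected; `split_confluent_frame` is a hypothetical helper for illustration and says nothing about how Onehouse implements deserialization.

```python
import struct

MAGIC_BYTE = 0  # first byte of the Confluent Schema Registry wire format


def split_confluent_frame(value: bytes):
    """Split a message value into (schema_id, payload).

    Raises ValueError if the value does not carry the 5-byte framing header.
    """
    if len(value) < 5:
        raise ValueError("value is too short to carry a Schema Registry header")
    # ">bI" = big-endian, 1 signed byte (magic) + 4-byte unsigned int (schema ID)
    magic, schema_id = struct.unpack(">bI", value[:5])
    if magic != MAGIC_BYTE:
        raise ValueError("unexpected magic byte: value was not framed for Schema Registry")
    return schema_id, value[5:]


# A framed value with schema ID 42, followed by an arbitrary payload.
framed = struct.pack(">bI", MAGIC_BYTE, 42) + b"\x02\x06abc"
print(split_confluent_frame(framed))
```

Running a check like this against a sample message from your topic is a quick way to tell whether the producer used a Schema Registry serializer.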

Usage Notes

- If a message is compacted or deleted within the Kafka topic, it can no longer be ingested, because its payload is now a tombstone (null value).

- Onehouse supports Kafka transactions. Note that the Confluent UI does not currently provide a way to see whether Kafka transactions are actively in use.

- If you're using Confluent Flink, Kafka transactions are enabled by default to ensure exactly-once processing. See https://docs.confluent.io/cloud/current/flink/concepts/delivery-guarantees.html for more information.
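To make the first note concrete, the following sketch simulates log compaction on an in-memory list of records. It is a simplified model that assumes only that compaction keeps the latest record per key and that a null value marks a deletion; the `compact` and `ingestible` helpers and the sample records are hypothetical.

```python
def compact(log):
    """Simulate log compaction on a list of (key, value) records.

    Keeps only the latest record per key. A record whose value is None is a
    tombstone: it marks the key as deleted, so no payload remains to ingest.
    """
    latest = {}
    for key, value in log:
        latest[key] = value
    return latest


def ingestible(compacted):
    """Return only the records that still carry a payload."""
    return {k: v for k, v in compacted.items() if v is not None}


log = [
    ("user-1", b'{"plan": "free"}'),
    ("user-2", b'{"plan": "pro"}'),
    ("user-1", b'{"plan": "pro"}'),  # supersedes the first user-1 record
    ("user-2", None),                # tombstone: user-2 is deleted
]
print(ingestible(compact(log)))
```

In this model, a reader that arrives after compaction sees only the latest `user-1` payload; the earlier `user-1` record and the `user-2` payload are no longer available.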

Secrets Management (BYOS)

If you are using Bring Your Own Secrets (BYOS), store your credentials in AWS Secrets Manager or Google Cloud Secret Manager using the JSON formats below. See the Secrets Management documentation for setup instructions and tag requirements.

SASL Protocol

Use your API key and API secret as the username and password.

{

"username": "<value>",

"password": "<value>"

}

TLS Protocol

{

"keystore_password": "<value>",

"key_password": "<value>"

}
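Before storing a secret, it can help to generate and check the JSON programmatically. The sketch below builds a secret string for either protocol using the key names from the formats above. `build_secret` is a hypothetical helper, and its strictness (exactly the listed keys, non-empty string values) is an assumption rather than a documented Onehouse requirement. Creating the secret in your secrets manager and applying the required tags are not shown.

```python
import json

# Key names taken from the JSON formats above.
REQUIRED_KEYS = {
    "SASL": {"username", "password"},
    "TLS": {"keystore_password", "key_password"},
}


def build_secret(protocol, **fields):
    """Return the secret as a JSON string, checking that the keys match the protocol."""
    expected = REQUIRED_KEYS[protocol]
    if set(fields) != expected:
        raise ValueError(f"{protocol} secret must contain exactly: {sorted(expected)}")
    if not all(isinstance(v, str) and v for v in fields.values()):
        raise ValueError("every secret value must be a non-empty string")
    return json.dumps(fields)


# Placeholder values; for SASL, use your Confluent API key and API secret.
sasl_secret = build_secret("SASL", username="<api-key>", password="<api-secret>")
print(sasl_secret)
```

The resulting string can then be pasted or uploaded as the secret value in AWS Secrets Manager or Google Cloud Secret Manager.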